AWS Certified Developer - Associate (DVA-C02)

- 495 exam-style questions

- Detailed explanations and references

- Simulation and custom modes

- Custom exam settings to drill down into specific topics

- 180-day access period

- Pass or money back guarantee

What is in the package

The tone and tenor of the questions mimic the actual exam. Along with the detailed description and exam tips provided within the explanations, we have extensively referenced AWS documentation to get you up to speed on all domain areas tested for the DVA-C02 exam.

Use our practice exams as the final pit-stop to cross the winning line with absolute confidence and get AWS Certified! Trust our process; you are in good hands.

Complete DVA-C02 domains coverage

CertVista DVA-C02 practice tests thoroughly cover all AWS Developer Associate certification exam domains, ensuring you're well-prepared to pass on the first try.

Development with AWS Services

We cover core development concepts, AWS SDK implementation, and cloud-native application design. This domain focuses on writing, maintaining, and debugging code for cloud applications using AWS services and tools. It includes working with AWS APIs, understanding service integration patterns, and implementing serverless architectures using AWS Lambda. The domain also emphasizes data store selection, implementation of caching strategies, and code-level optimization techniques.

Security

The Security domain addresses fundamental application security concepts and implementation patterns in AWS. This domain explores authentication mechanisms, authorization frameworks, and encryption methodologies for cloud applications. It covers secure coding practices, management of application secrets, and implementation of AWS security services. The domain emphasizes understanding IAM roles, security best practices, and secure data handling in cloud environments.

Deployment

The Deployment domain focuses on modern application deployment strategies and automation practices in AWS. This domain covers the entire deployment lifecycle, including artifact preparation, environment management, and automated testing strategies. It includes understanding CI/CD pipelines, deployment tools like AWS CodePipeline and AWS CodeDeploy, and implementation of various deployment patterns. The domain emphasizes automation, testing methodologies, and deployment best practices.

Troubleshooting and Optimization

The Troubleshooting and Optimization domain explores application troubleshooting methodologies and performance optimization techniques in AWS. This domain covers monitoring implementation, logging strategies, and performance measurement tools. It includes understanding Amazon CloudWatch, AWS X-Ray, and other observability services for debugging and optimization. The domain emphasizes root cause analysis, performance tuning, and implementation of cost-effective solutions.

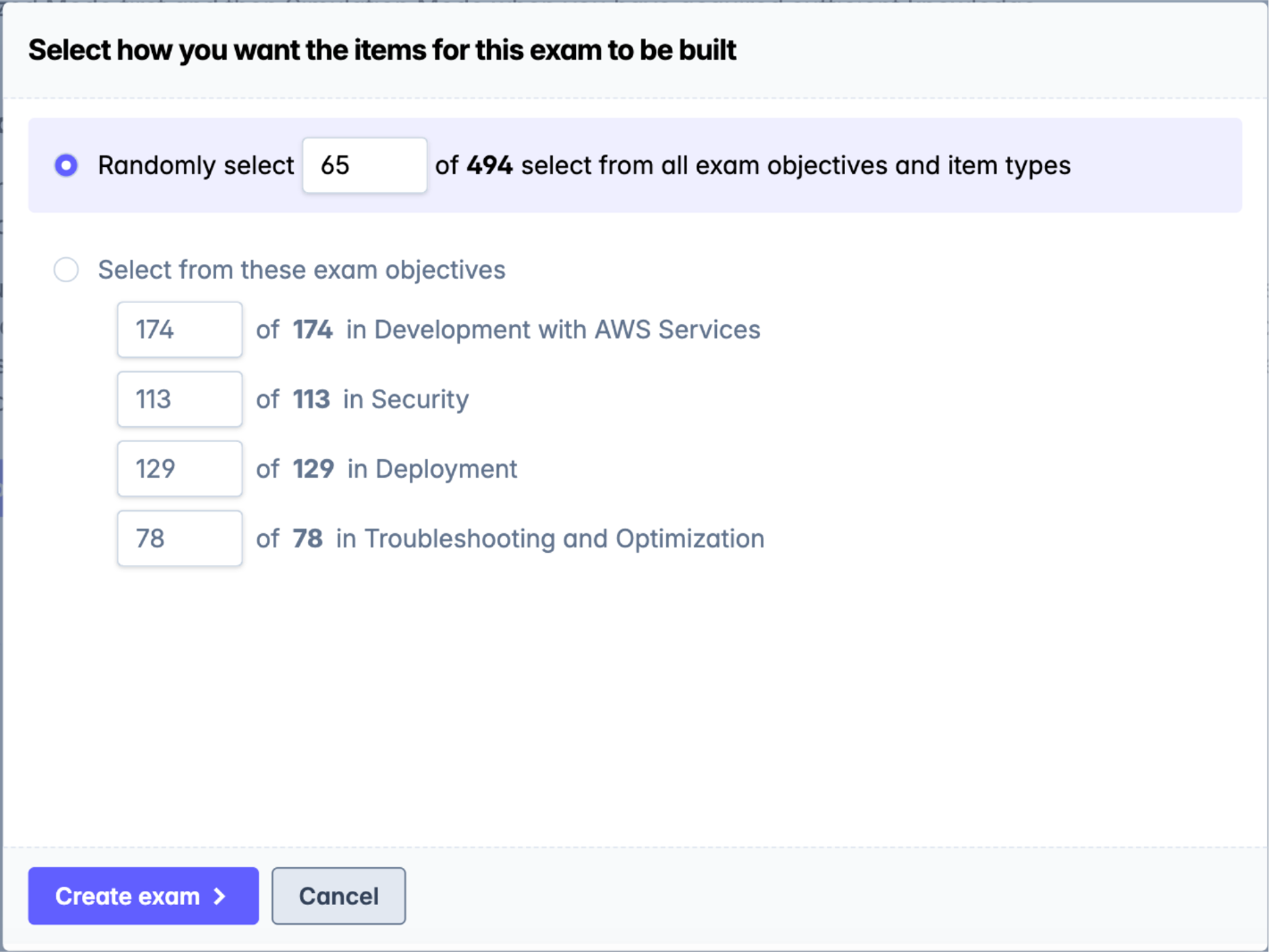

CertVista's Developer Associate question bank contains hundreds of exam-style questions that accurately replicate the certification exam environment. Practice with diverse question types, including multiple-choice, multiple-response, and scenario-based questions focused on real-world cloud development challenges. The CertVista exam engine will familiarize you with the real exam environment so you can approach your certification with confidence.

Each CertVista question comes with detailed explanations and references. The explanations outline the underlying AWS development principles, reference official AWS documentation, and clarify common coding and implementation misconceptions. You'll learn why the correct answer satisfies the development scenario presented in the question and why the other options do not.

CertVista offers two effective study modes: Custom Mode is for focused practice on specific AWS development domains and is perfect for strengthening knowledge in targeted areas like Lambda functions, API implementation, or security configurations. Simulation Mode replicates the 130-minute exam environment with authentic time pressure and question distribution, building confidence and stamina.

The CertVista analytics dashboard helps you gain clear insights into your AWS Developer Associate exam preparation. You can monitor your performance across all exam domains and identify knowledge gaps in code development, security implementation, deployment strategies, and troubleshooting. This will help you create an efficient study strategy and know when you're ready for certification.

What's in the DVA-C02 exam

The AWS Certified Developer - Associate exam is intended for professionals who perform a development role. Earning the certification demonstrates an understanding of core AWS services, their uses, and basic AWS architecture best practices, as well as proficiency in developing, deploying, and debugging cloud-based applications on AWS. The exam has no formal prerequisites, but AWS recommends one or more years of hands-on experience developing and maintaining an AWS-based application.

The exam has 65 questions and lasts 130 minutes. Questions are either multiple choice (one correct response and three incorrect responses) or multiple response (two or more correct responses out of five or more options). You may take the exam at a testing center or through online proctoring. Candidates should visit the AWS Certified Developer - Associate page for the most current details and to download the exam guide.

In addition to explaining all the exam domains, CertVista will help you learn the different building blocks of AWS, which will help you create a secure, scalable, cloud-native application to be successful as an AWS developer in the real world.

About CertVista DVA-C02 practice exams

CertVista is your one-stop preparation guide for the latest exam with a focus on hands-on development. Our practice exams are intended for cloud developers, architects, consultants, DevOps engineers, managers, and leaders who are using AWS Cloud to provide services to their end clients. We cover all exam objectives and provide detailed steps to code, build, deploy, migrate, monitor, and debug cloud-native applications using AWS. You will gain the technical knowledge and skills necessary with best practices for building secure, reliable, cloud-native applications using AWS services.

Sample DVA-C02 questions

Get a taste of the AWS Certified Developer - Associate exam with our carefully curated sample questions below. These questions mirror the actual DVA-C02 exam's style, complexity, and subject matter, giving you a realistic preview of what to expect. Each question comes with comprehensive explanations, relevant documentation references, and valuable test-taking strategies from our expert instructors.

While these sample questions provide excellent study material, we encourage you to try our free demo for the complete DVA-C02 exam preparation experience. The demo features our state-of-the-art test engine that simulates the real exam environment, helping you build confidence and familiarity with the exam format. You'll experience timed testing, question marking, and review capabilities – just like the actual certification exam.

An AWS Lambda function accesses two Amazon DynamoDB tables. A developer wants to improve the performance of the Lambda function by identifying bottlenecks in the function.

How can the developer inspect the timing of the DynamoDB API calls?

- Add DynamoDB as an event source to the Lambda function. View the performance with Amazon CloudWatch metrics.

- Place an Application Load Balancer (ALB) in front of the two DynamoDB tables. Inspect the ALB logs.

- Limit Lambda to no more than five concurrent invocations. Monitor from the Lambda console.

- Enable AWS X-Ray tracing for the function. View the traces from the X-Ray service.

The best way to inspect the timing of the DynamoDB API calls made by the Lambda function and identify any bottlenecks is to enable AWS X-Ray tracing for the function. AWS X-Ray allows you to trace requests made by your applications as they travel through various AWS services. By enabling X-Ray, you can get detailed information about the performance of your Lambda function, including the duration of each DynamoDB API call. This information is invaluable for identifying and diagnosing performance bottlenecks.

Other options do not provide a direct method for inspecting the timing of API calls. Adding DynamoDB as an event source or using an ALB is irrelevant to timing DynamoDB API calls. Limiting Lambda concurrency does not help in inspecting API call timing and could adversely affect performance by throttling executions.

An application is processing clickstream data using Amazon Kinesis. The clickstream data feed into Kinesis experiences periodic spikes. The PutRecords API call occasionally fails and the logs show that the failed call returns the response shown below:

    {
        "FailedRecordCount": 1,
        "Records": [
            {
                "SequenceNumber": "212693199899900637946712965403778482371",
                "ShardId": "shardId-00000000001"
            },
            {
                "ErrorCode": "ProvisionedThroughputExceededException",
                "ErrorMessage": "Rate exceeded for shard shardId-00000000001 in stream exampleStreamName under account 123456789."
            },
            {
                "SequenceNumber": "21269319989999637946712965403778482985",
                "ShardId": "shardId-00000000002"
            }
        ]
    }

Which techniques will help mitigate this exception? (Choose two.)

- Use Amazon SNS instead of Kinesis.

- Use a PutRecord API instead of PutRecords.

- Reduce the frequency and/or size of the requests.

- Reduce the number of KCL consumers.

- Implement retries with exponential backoff.

In the scenario described, the issue occurs when the PutRecords API call to Amazon Kinesis occasionally fails due to periodic spikes in clickstream data. Such spikes can lead to throttling of requests, causing exceptions. Choosing the right mitigation techniques is crucial for sustaining the application's performance.

Firstly, reducing the frequency and/or size of the requests can be beneficial. During spikes, if the volume of data being sent in a single request is lower or the requests are sent less frequently, it can alleviate issues stemming from exceeding throughput limits. Thus, this technique directly addresses the issue of overwhelming the Kinesis stream.

Secondly, implementing retries with exponential backoff is a common strategy in distributed systems to handle transient faults gracefully. By retrying failed requests with increasing wait times between each attempt, it reduces the likelihood of overwhelming the system further during a temporary spike. This method is particularly effective in situations where failures may be intermittent, providing the system with time to recover.

Using Amazon SNS instead of Kinesis wouldn't solve the problem at hand, as SNS is a pub/sub messaging service and is not designed for high-throughput streaming data ingestion. Using the PutRecord API instead of PutRecords gives up batching, so the producer makes many more requests while the per-shard write limit that triggered the exception stays the same. Reducing the number of KCL (Kinesis Client Library) consumers affects the read side of the stream and is unrelated to throttling of PutRecords calls, so it does not address the reported issue.

When dealing with transient issues like API throttling in AWS, always consider optimization of request size/frequency and implementing retry mechanisms with backoff strategies. These are common patterns for handling scalability issues effectively.
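
The retry technique described above can be sketched in a few lines. This is a minimal illustration, not a definitive implementation: the function name `put_records_with_backoff` and its parameters are hypothetical, and `put_records` stands for any callable that sends a batch and returns a PutRecords-style response (with boto3 it could wrap `kinesis.put_records(StreamName=..., Records=batch)`). It relies on the fact that the response `Records` array is in the same order as the request, so only the entries carrying an `ErrorCode` are resent.

```python
import random
import time


def put_records_with_backoff(put_records, records, max_attempts=5,
                             base_delay=0.1, max_delay=5.0, sleep=time.sleep):
    """Send records, resending only the failed ones with exponential backoff.

    Returns the records that still failed after max_attempts (empty on success).
    """
    pending = list(records)
    for attempt in range(max_attempts):
        response = put_records(pending)
        # Response entries line up with request entries; failures have an ErrorCode.
        pending = [
            record
            for record, result in zip(pending, response["Records"])
            if "ErrorCode" in result
        ]
        if not pending:
            return []
        if attempt < max_attempts - 1:
            # Double the delay ceiling each attempt and add jitter so that
            # many producers do not retry in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    return pending
```

Resending only the failed subset matters because PutRecords can partially succeed: resending the whole batch would duplicate the records that were already accepted.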

A company uses AWS to run its learning management system (LMS) application. The application runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The application's domain name is managed in Amazon Route 53. The application is deployed in a single AWS Region, but the company wants to improve application performance for users all over the world.

Which solution will improve global performance with the least operational overhead?

- Launch more EC2 instances behind the ALB. Configure the ALB to use session affinity (sticky sessions). Create a Route 53 alias record for the ALB by using a geolocation routing policy.

- Deploy the application to multiple Regions across the world. Create a Route 53 alias record for the ALB by using a latency-based routing policy.

- Set up an Amazon CloudFront distribution that uses the ALB as the origin server. Configure Route 53 to create a DNS alias record that points the application's domain name to the CloudFront distribution URL.

- Create an AWS Client VPN endpoint in the VPC. Instruct users to connect to the VPN to access the application. Create a Route 53 alias record for the VPN endpoint. Configure Route 53 to use a geolocation routing policy.

The solution that best improves global performance with the least operational overhead is to set up an Amazon CloudFront distribution using the Application Load Balancer (ALB) as the origin server. CloudFront is a content delivery network (CDN) service that securely delivers data, videos, applications, and APIs to customers globally with low latency and high transfer speeds. Using CloudFront, you can cache content at edge locations close to users, reducing latency and improving application performance for users worldwide.

CloudFront can be configured to point to the ALB as the origin server, enabling requests from users to be routed to the nearest edge location. The edge location will serve the cached content or forward requests to the origin if the content is not cached, ensuring efficient data delivery. This approach has minimal operational overhead because it leverages AWS-managed services to handle regional distribution and cache management.

Deploying the application to multiple Regions and using latency-based routing or launching more EC2 instances in the same Region would increase operational complexity, requiring management of multiple infrastructure components and increased cost without necessarily providing the global performance improvement afforded by using a CDN like CloudFront.

Using an AWS Client VPN with geolocation routing is more suited for secure, private connectivity and does not inherently enhance performance. It also requires additional configuration and user management.

Real-world examples of employing Amazon CloudFront include dynamic web applications where content delivery and caching can significantly enhance user experience by improving load times and providing a seamless browsing experience.

For more details on configuring Amazon CloudFront to work with an Application Load Balancer, see the Amazon CloudFront documentation.

Focus on services that provide global distribution and caching solutions, such as Amazon CloudFront, when considering performance improvements for users around the world. These services typically require less operational overhead compared to deploying resources in multiple Regions.

A developer is building an ecommerce application. When there is a sale event, the application needs to concurrently call three third-party systems to record the sale. The developer wrote three AWS Lambda functions. There is one Lambda function for each third-party system, which contains complex integration logic.

These Lambda functions are all independent. The developer needs to design the application so each Lambda function will run regardless of others' success or failure.

Which solution will meet these requirements?

- Publish the sale event from the application to an Application Load Balancer (ALB). Add the three Lambda functions as ALB targets.

- Publish the sale event from the application to an Amazon Simple Queue Service (Amazon SQS) queue. Configure the three Lambda functions to poll the queue.

- Publish the sale event from the application to an AWS Step Functions state machine. Move the logic from the three Lambda functions into the Step Functions state machine.

- Publish the sale event from the application to an Amazon Simple Notification Service (Amazon SNS) topic. Subscribe the three Lambda functions to be triggered by the SNS topic.

The developer requires a solution where each Lambda function can run independently of the others' success or failure. In such a scenario, publishing the sale event to an Amazon Simple Notification Service (SNS) topic is the most appropriate solution. This design allows different components to subscribe to the SNS topic and be triggered independently when a message is published. It supports a fan-out pattern, which means that each subscriber will receive and process the message independently, even if other subscribers fail or succeed.

Publishing to an Application Load Balancer (ALB) does not meet the requirement. An ALB can invoke Lambda functions as targets, but it routes each incoming request to a single target; it does not deliver the same event to all three functions. Hence, this approach does not suit the requirement for concurrent and independent processing.

Amazon SQS decouples producers from consumers, and Lambda can poll a queue for new messages. However, a queue delivers each message to one consumer rather than broadcasting it, so with three functions polling the same queue only one of them would process a given sale event. Reaching all three functions would require a separate queue per function plus a fan-out layer in front of the queues, which is the role SNS plays.

Implementing an AWS Step Functions state machine could work if coordinating tasks with control flow is necessary. However, it introduces complexity by combining all the logic into one state machine and primarily focuses on handling workflow orchestration rather than simple parallel triggering of independent services.

Examples

A real-world example of this solution would be when an event such as a new sale can generate multiple downstream effects, like updating records in different systems (inventory, accounting, CRM systems), and you want each of these systems to be notified and respond in parallel without being dependent on their individual success or failure.

You can configure SNS to automatically trigger AWS Lambda functions by subscribing the functions to an SNS topic. When the application publishes a message to the SNS topic, all subscribed Lambda functions receive the event, enabling independent processing:

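    import boto3

    # Create an SNS client using the default credentials and Region.
    sns_client = boto3.client('sns')

    # Publish the sale event; SNS delivers a copy to every subscribed Lambda function.
    response = sns_client.publish(
        TopicArn='string',  # placeholder: replace with the ARN of the SNS topic
        Message='message',
        Subject='subject'
    )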

A developer wants to expand an application to run in multiple AWS Regions. The developer wants to copy Amazon Machine Images (AMIs) with the latest changes and create a new application stack in the destination Region. According to company requirements, all AMIs must be encrypted in all Regions. However, not all the AMIs that the company uses are encrypted. How can the developer expand the application to run in the destination Region while meeting the encryption requirement?

- Use AWS Key Management Service (AWS KMS) to enable encryption on the unencrypted AMIs. Copy the encrypted AMIs to the destination Region.

- Use AWS Certificate Manager (ACM) to enable encryption on the unencrypted AMIs. Copy the encrypted AMIs to the destination Region.

- Copy the unencrypted AMIs to the destination Region. Enable encryption by default in the destination Region.

- Create new AMIs, and specify encryption parameters. Copy the encrypted AMIs to the destination Region. Delete the unencrypted AMIs.

To expand an application to run in multiple AWS Regions while meeting the requirement of AMIs being encrypted, the developer should create new AMIs specifying the encryption parameters. Once the AMIs are encrypted, they can be copied to the destination Region. This strategy ensures that AMIs comply with the company's encryption requirement before they are replicated.

AWS KMS supplies the keys used to encrypt AMIs, but you cannot apply AWS KMS directly to an existing AMI to encrypt it in place. Instead, a new AMI must be created with encryption settings specified. AWS Certificate Manager (ACM) cannot be used to encrypt AMIs because ACM manages SSL/TLS certificates. Copying unencrypted AMIs to the destination Region and then enabling encryption by default does not work either: the setting applies only to resources created after it is turned on, so the AMIs that were already copied stay unencrypted.

In practice, a developer who needs the latest changes launches an EC2 instance from the existing unencrypted AMI, applies the changes, and creates a new AMI from that instance. The encrypted AMI is then produced by copying the image with encryption enabled and an AWS KMS key specified; the copy is a new AMI backed by encrypted snapshots. After the encrypted AMIs exist, they can be copied to the target Region and the unencrypted originals can be deleted.

Example:

Suppose a developer has an unencrypted AMI in the us-east-1 Region and wants an encrypted version available in us-west-2. The developer launches an EC2 instance from the original AMI in us-east-1, applies the required updates, and creates a new AMI from the instance. The developer then copies that AMI with encryption enabled and an AWS KMS key specified, which yields an encrypted AMI. Once the encrypted AMI is available, it can be copied to us-west-2, enabling deployment of the entire application stack with encrypted AMIs.
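
One way to create an encrypted AMI from an unencrypted one is the EC2 CopyImage operation with encryption enabled. The sketch below is a hedged illustration rather than the only approach: the function name `copy_ami_encrypted` is hypothetical, and `ec2_client` is assumed to be a boto3 EC2 client created in the Region where the copy should live (for example `boto3.client('ec2', region_name='us-west-2')`). The keyword arguments are those of boto3's `copy_image` call.

```python
def copy_ami_encrypted(ec2_client, source_image_id, source_region, name, kms_key_id):
    """Copy an AMI into the client's Region as a new, encrypted AMI.

    ec2_client must be bound to the destination Region; kms_key_id names
    the KMS key used to encrypt the snapshots of the copy.
    """
    response = ec2_client.copy_image(
        Name=name,
        SourceImageId=source_image_id,
        SourceRegion=source_region,
        Encrypted=True,       # encrypt the snapshots backing the new AMI
        KmsKeyId=kms_key_id,  # KMS key to use for the encrypted copy
    )
    # The call returns immediately with the ID of the new AMI;
    # the image becomes usable once the copy completes.
    return response["ImageId"]
```

Because the source AMI is left untouched, removing the unencrypted original is a separate step.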

A company is developing a serverless application by using AWS Lambda functions. One of the Lambda functions needs to access an Amazon RDS DB instance. The DB instance is in a private subnet inside a VPC.

The company creates a role that includes the necessary permissions to access the DB instance. The company then assigns the role to the Lambda function. A developer must take additional action to give the Lambda function access to the DB instance.

What should the developer do to meet these requirements?

- Assign a public IP address to the DB instance. Modify the security group of the DB instance to allow inbound traffic from the IP address of the Lambda function.

- Set up an AWS Direct Connect connection between the Lambda function and the DB instance.

- Configure an Amazon CloudFront distribution to create a secure connection between the Lambda function and the DB instance.

- Configure the Lambda function to connect to the private subnets in the VPC. Add security group rules to allow traffic to the DB instance from the Lambda function.

To give the AWS Lambda function access to an Amazon RDS DB instance located in a private subnet within a VPC, the developer must ensure the Lambda function is configured with VPC access. Assigning the necessary IAM role to the Lambda function is one step, but, for network access to the DB instance, there are additional requirements.

The Lambda function must be configured to run in the same VPC, allowing it to access resources within that VPC, such as the RDS DB instance. This involves specifying the VPC, subnets, and security groups when configuring your Lambda function. Once configured correctly, Lambda will assign Elastic Network Interfaces (ENIs) to operate within the VPC’s subnets, allowing it to communicate with the RDS DB instance over the private IP network without requiring a public IP address.

Moreover, modifying security group rules is crucial. You will need to ensure that the security group associated with the RDS DB instance allows inbound traffic from the Lambda function. Typically, this would mean allowing incoming database traffic on the appropriate port (e.g., TCP 3306 for MySQL) from the Lambda's security group.

Assigning a public IP to the DB instance makes it accessible over the internet, reducing security without helping internal network communication. AWS Direct Connect and Amazon CloudFront are not suitable for this use case: Direct Connect establishes dedicated physical connections between on-premises networks and AWS, and CloudFront is a content delivery network. Neither solves the problem of allowing secure access from Lambda to RDS inside a private VPC subnet.

A developer needs to use the AWS CLI on an on-premises development server temporarily to access AWS services while performing maintenance. The developer needs to authenticate to AWS with their identity for several hours.

What is the most secure way to call AWS CLI commands with the developer's IAM identity?

- Specify the developer's IAM access key ID and secret access key as parameters for each CLI command.

- Run the aws configure CLI command. Provide the developer's IAM access key ID and secret access key.

- Specify the developer's IAM profile as a parameter for each CLI command.

- Run the get-session-token CLI command with the developer's IAM user. Use the returned credentials to call the CLI.

The most secure method for temporarily accessing AWS services through the CLI is using temporary security credentials, which can be obtained using the get-session-token command. This command is part of AWS Security Token Service (STS) and provides temporary credentials that are valid for a specified period. This reduces the risk associated with long-lived credentials, as the temporary credentials expire after a set time.

Using temporary credentials is more secure than specifying access keys directly in commands or using the aws configure command, which stores the credentials in plain text on disk. By using get-session-token, you leverage short-lived credentials, enhancing security by minimizing the window in which compromised credentials can be used.
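
As a rough sketch of how the returned credentials are used: `aws sts get-session-token` (optionally with `--duration-seconds`) prints a JSON document with a `Credentials` object, and the AWS CLI reads temporary credentials from the `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_SESSION_TOKEN` environment variables. The helper name `session_env` and the sample values below are hypothetical.

```python
import json


def session_env(cli_output: str) -> dict:
    """Map the JSON output of `aws sts get-session-token` to CLI environment variables."""
    creds = json.loads(cli_output)["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }


# Made-up sample output; real values come from the STS call.
sample_output = json.dumps({
    "Credentials": {
        "AccessKeyId": "ASIAEXAMPLEKEYID",
        "SecretAccessKey": "example-secret-access-key",
        "SessionToken": "example-session-token",
        "Expiration": "2030-01-01T12:00:00+00:00",
    }
})

env = session_env(sample_output)
for name in sorted(env):
    # Print only the variable names here; the values are secrets.
    print(f"export {name}=<value>")
```

Once the credentials expire, the CLI calls fail until a new session token is requested, which is what limits the exposure window.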

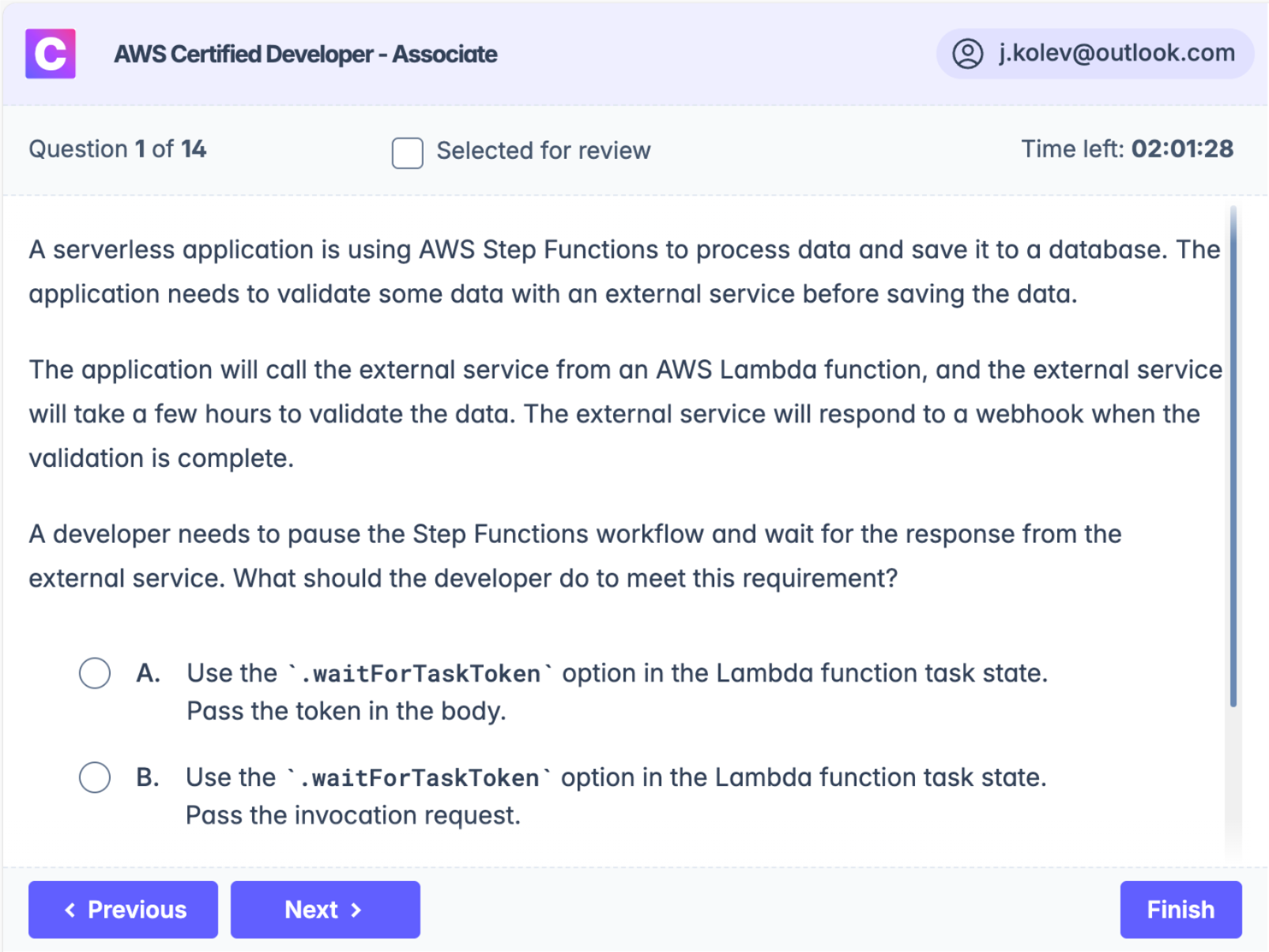

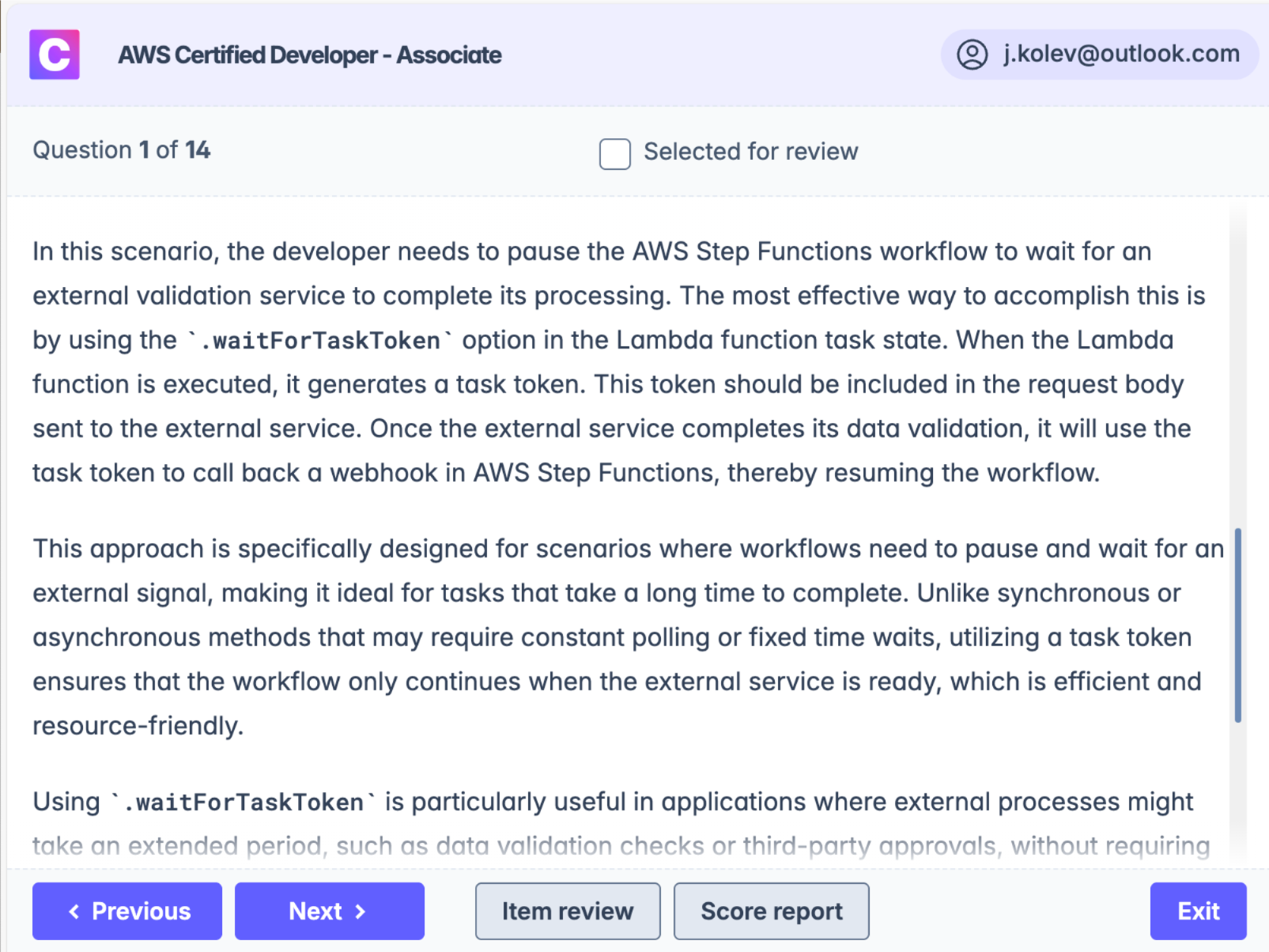

A serverless application is using AWS Step Functions to process data and save it to a database. The application needs to validate some data with an external service before saving the data.

The application will call the external service from an AWS Lambda function, and the external service will take a few hours to validate the data. The external service will respond to a webhook when the validation is complete.

A developer needs to pause the Step Functions workflow and wait for the response from the external service. What should the developer do to meet this requirement?

- Use the .waitForTaskToken option in the Lambda function task state. Pass the token in the body.

- Use the .waitForTaskToken option in the Lambda function task state. Pass the invocation request.

- Call the Lambda function in synchronous mode. Wait for the external service to complete the processing.

- Call the Lambda function in asynchronous mode. Use the Wait state until the external service completes the processing.

In this scenario, the developer needs to pause the AWS Step Functions workflow until an external validation service finishes its processing. The most effective way to accomplish this is to use the .waitForTaskToken option in the Lambda function task state. Step Functions generates a task token for the task and exposes it through the context object, so the state definition can pass the token to the Lambda function in the payload. The Lambda function includes the token in the request body it sends to the external service. When the external service later calls the webhook, the webhook handler returns the token to Step Functions with the SendTaskSuccess (or SendTaskFailure) API call, which resumes the workflow.

This approach is specifically designed for scenarios where workflows need to pause and wait for an external signal, making it ideal for tasks that take a long time to complete. Unlike synchronous or asynchronous methods that may require constant polling or fixed time waits, utilizing a task token ensures that the workflow only continues when the external service is ready, which is efficient and resource-friendly.

Using .waitForTaskToken is particularly useful in applications where external processes might take an extended period, such as data validation checks or third-party approvals, without requiring the workflow to be actively polling or consuming resources unnecessarily.
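
The callback pattern can be sketched as follows, under stated assumptions. The task state uses the `arn:aws:states:::lambda:invoke.waitForTaskToken` resource and reads the token from the context object at `$$.Task.Token`. The helper names, the payload field names (`taskToken`, `input`), and the function and state names are hypothetical. `sfn_client` is assumed to be a boto3 Step Functions client; `send_task_success` is the call a webhook handler would make when the external service reports back.

```python
import json


def build_wait_for_callback_state(function_name, next_state):
    """Return a Task state (as a dict) that pauses until the token is returned."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke.waitForTaskToken",
        "Parameters": {
            "FunctionName": function_name,
            "Payload": {
                # Context object value: the token Step Functions generated for this task.
                "taskToken.$": "$$.Task.Token",
                # Forward the state's input unchanged to the Lambda function.
                "input.$": "$",
            },
        },
        "Next": next_state,
    }


def resume_workflow(sfn_client, task_token, result):
    """Called by the webhook handler once the external service has finished."""
    sfn_client.send_task_success(taskToken=task_token, output=json.dumps(result))


state = build_wait_for_callback_state("ValidateWithExternalService", "SaveToDatabase")
print(json.dumps(state, indent=2))
```

In a real state machine it is worth adding a timeout to such a state so that a lost callback does not leave the execution waiting indefinitely.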

For further details, you can refer to the AWS documentation on AWS Step Functions Task Tokens.

Test takers should remember that AWS Step Functions offers powerful features to manage asynchronous workflows efficiently, making them suitable for integrating with external systems that provide asynchronous responses.

A developer deployed an application to an Amazon EC2 instance. The application needs to know the public IPv4 address of the instance.

How can the application find this information?

- Query the instance metadata from http://169.254.169.254/latest/meta-data/.

- Query the instance user data from http://169.254.169.254/latest/user-data/.

- Query the Amazon Machine Image (AMI) information from http://169.254.169.254/latest/meta-data/ami/.

- Check the hosts file of the operating system.

The most appropriate method for an application running on an EC2 instance to find its public IPv4 address is to query the instance metadata. The instance metadata service provides details about the instance, including its public IP address, accessible via HTTP from the URL http://169.254.169.254/latest/meta-data/public-ipv4. This is a well-known method to retrieve various information about the instance directly from within the instance itself.

- Querying the instance user data would not provide the IP address, as user data is typically used for configuration scripts or settings supplied during instance launch.

- Querying AMI information will not yield the public IP address, as it only provides details about the Amazon Machine Image used to launch the instance.

- The hosts file does not contain dynamic information like the public IP address assigned by AWS.
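
A minimal sketch of the metadata query, using only the Python standard library. It follows the IMDSv2 flow, which is an addition to the explanation above: a session token is first requested with a PUT to `/latest/api/token`, then sent in the `X-aws-ec2-metadata-token` header. Instances that still allow IMDSv1 also answer a plain GET. The function names are hypothetical, and the code only returns a useful result when run on an EC2 instance that has a public IPv4 address.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def build_token_request(ttl_seconds=21600):
    """Request for an IMDSv2 session token (TTL is given in seconds)."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path, token):
    """Request for one metadata item, authenticated with the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def get_public_ipv4(urlopen=urllib.request.urlopen, timeout=2):
    """Return the instance's public IPv4 address as a string."""
    with urlopen(build_token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urlopen(build_metadata_request("public-ipv4", token), timeout=timeout) as resp:
        return resp.read().decode()
```

The `urlopen` parameter exists only so the function can be exercised off-instance with a stand-in; on an instance, `get_public_ipv4()` with the defaults is enough.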

A company has moved a legacy on-premises application to AWS by performing a lift and shift. The application exposes a REST API that can be used to retrieve billing information. The application is running on a single Amazon EC2 instance. The application code cannot support concurrent invocations. Many clients access the API, and the company adds new clients all the time.

A developer is concerned that the application might become overwhelmed by too many requests. The developer needs to limit the number of requests to the API for all current and future clients. The developer must not change the API, the application, or the client code.

What should the developer do to meet these requirements?

- Place the API behind an Amazon API Gateway API. Set the server-side throttling limits.

- Place the API behind a Network Load Balancer. Set the target group throttling limits.

- Place the API behind an Application Load Balancer. Set the target group throttling limits.

- Place the API behind an Amazon API Gateway API. Set the per-client throttling limits.

Placing the API behind an Amazon API Gateway and setting server-side throttling limits is an effective way to control the number of requests to the API without modifying the application or client code. API Gateway provides a robust mechanism for throttling requests, which can be applied globally to limit the total number of requests per second to the API. This helps protect the backend system from being overwhelmed by excessive requests, ensuring stability and consistent performance.

Amazon API Gateway: This service allows you to create, publish, maintain, monitor, and secure APIs. It can handle all the tasks involved in accepting and processing up to hundreds of thousands of concurrent API calls, including traffic management, authorization and access control, monitoring, and API version management. By setting server-side throttling limits, the company can ensure that the API does not receive more requests than it can handle at any given time.

Network Load Balancer and Application Load Balancer: These services are typically used for distributing incoming application or network traffic across multiple targets, such as EC2 instances. They do not inherently provide request throttling features like API Gateway does. Throttling at the load balancer level would require additional configurations and may not be as straightforward or effective as using API Gateway for this specific need.

Per-client throttling: While also a feature of API Gateway, focusing on server-side throttling in this scenario is more appropriate because it limits total requests to the API rather than setting specific limits per client. This approach ensures that the application is protected against a sudden surge in requests overall, which is the primary concern here.
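
API Gateway's documentation describes its throttling in terms of a token bucket with a steady-state rate and a burst size. The sketch below is a simplified illustration of that idea, not API Gateway's implementation. The class name, parameter names, and the explicit `now` argument (used instead of a real clock so the behavior is easy to follow) are all assumptions made for the example.

```python
class TokenBucket:
    """Simplified token bucket: `rate` tokens refill per second, up to `burst`."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full, so an initial burst is allowed
        self.last = now

    def allow(self, now):
        """Return True if a request arriving at time `now` is admitted."""
        elapsed = now - self.last
        # Refill for the elapsed time, never exceeding the burst capacity.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller would answer with HTTP 429 Too Many Requests


bucket = TokenBucket(rate=1, burst=2)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
print(decisions)  # → [True, True, False, True]
```

A server-side limit corresponds to one bucket shared by all callers, which is why it protects the backend regardless of how many clients are added later.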

In the real world:

A real-world example of using API Gateway for throttling can be found in scenarios where applications need to ensure a consistent quality of service when accessed by a large number of clients. For instance, a billing service API that needs to prevent excessive access that could lead to system overload would benefit from server-side throttling to maintain system stability.

Remember that API Gateway is often used for managing API traffic and can provide features like throttling, caching, and monitoring, which are essential for protecting backend services from being overwhelmed.

Frequently Asked Questions

The AWS Certified Developer Associate (DVA-C02) exam is designed for individuals who perform a developer role. It validates a candidate's ability to design, develop, and deploy cloud-based solutions using AWS services.

The exam covers a range of topics, including AWS core services, security, development with AWS SDKs, application deployment, and troubleshooting. It consists of multiple-choice and multiple-response questions.

The exam includes 65 questions, combining scored and unscored items. To pass, candidates must achieve a minimum score of 720 out of 1000.

Preparation can include studying the official AWS exam guide, using AWS Skill Builder resources, reviewing AWS whitepapers, and practicing with CertVista practice tests.

While there are no official prerequisites, it is recommended that candidates have at least one year of hands-on experience developing and maintaining an AWS-based application.

The exam is a proctored, timed test that can be taken at a testing center or online. It features multiple-choice and multiple-response questions to assess a candidate's AWS knowledge and skills.

Using CertVista practice tests can prepare you for the DVA-C02 exam if you focus on understanding core concepts rather than memorizing answers. We recommend achieving consistent scores of 90-95% or higher on the practice tests while explaining why each answer is correct or incorrect.

We intentionally designed our practice questions to be slightly more challenging than the actual exam questions. This approach helps build a deeper understanding of the concepts and creates a stronger knowledge foundation. When you encounter actual exam questions, you'll be better prepared and more confident in your answers.

Our question pools undergo regular updates to align with the latest exam patterns and AWS service changes. We maintain daily monitoring of exam changes and promptly incorporate new content to ensure our practice tests remain current and relevant.

Each practice question includes comprehensive explanations covering correct and incorrect answer choices. Our explanations provide detailed technical information, relevant AWS service details, and real-world context. These explanations serve as effective learning resources, helping you understand what the correct answer is, why it's correct, and how it applies to cloud computing scenarios.