AWS Certified Data Engineer - Associate (DEA-C01)

- 235 exam-style questions

- Detailed explanations and references

- Simulation and custom modes

- Custom exam settings to drill down into specific topics

- 180-day access period

- Pass or money back guarantee

What is in the package

The tone and tenor of our questions mimic the actual exam. Along with the detailed description and exam tips provided within the explanations, we have extensively referenced AWS documentation to get you up to speed on all domain areas tested for the DEA-C01 exam.

Beyond exam preparation, our practice exams serve as a lasting reference guide. From setting up secure data pipelines to monitoring and troubleshooting operations, you will find the knowledge and skills needed for real-world scenarios. Whether you're an aspiring data engineer or a seasoned professional, CertVista DEA-C01 will equip you with the tools to advance your career and excel in the ever-growing field of data engineering.

Complete DEA-C01 domains coverage

CertVista DEA-C01 is organized into four domains that closely relate to the topics necessary to cover the Certified Data Engineer exam.

Data Ingestion and Transformation

CertVista DEA-C01 covers selecting and implementing appropriate AWS services (e.g., Kinesis, DMS, Glue, Snow Family) to ingest data from various sources based on velocity and volume requirements. It assesses the ability to design, build, and optimize data transformation pipelines using services like AWS Glue, EMR, and Lambda to process, partition, format (e.g., Parquet, Avro), and validate data for analytical use cases, including schema management.

Data Store Management

Here, we focus on selecting, configuring, and managing suitable AWS data storage solutions (e.g., S3, Redshift, DynamoDB, RDS) aligned with access patterns, data structure, and cost considerations. It includes implementing data lifecycle policies, applying optimal partitioning and file formats, managing data catalogs using AWS Glue Data Catalog, and optimizing storage for query performance and cost-efficiency.

Data Operations and Monitoring

You'll learn how to assess the ability to automate, orchestrate, monitor, and troubleshoot data processing workflows using services like Step Functions, Glue Workflows, and Managed Workflows for Apache Airflow. Key skills include implementing monitoring, logging, and alerting with CloudWatch, diagnosing pipeline failures and performance issues, managing pipeline deployments, and ensuring operational resilience and efficiency.

Data Security and Governance

CertVista DEA-C01 covers implementing security controls and data governance practices across data pipelines and storage. It includes applying security mechanisms like IAM for access control, KMS for encryption, and network security configurations. Candidates must demonstrate proficiency in managing data permissions (potentially with Lake Formation), ensuring compliance, implementing data cataloging, monitoring data quality, and applying data privacy techniques.

Our practice exams mirror the DEA-C01 format, pacing, and pressure, so you can build real test-day stamina. The interface, timers, and review workflow feel familiar, helping you manage time, flag questions, and make confident passes through the exam.

Questions are crafted to match the tone and difficulty of the real exam—scenario-driven, multi-step, and sprinkled with plausible distractors. We cover every domain and subtopic from the current exam guide, so you’re not surprised by how content is distributed.

You can switch between full timed exams and untimed practice modes. Choose end-of-exam scoring to simulate the real experience or immediate feedback when you’re focused on learning.

Every question includes a clear, step-by-step rationale: why the correct option is right, why the others aren’t, and what clues in the scenario point you there. We emphasize trade-offs across cost, performance, scalability, reliability, and security—the same thinking the exam expects.

We unpack gotchas and edge cases you’re likely to see in data engineering work: partitioning pitfalls, schema evolution, workload patterns, data movement choices, and service limits. Where helpful, we reference official docs and best practices so you can go deeper with confidence.

The goal is durable understanding. By the time you’ve reviewed an explanation set, you’ll recognize patterns and apply them, not just memorize answers.

A simple dashboard shows your accuracy, speed, and confidence by domain and subtopic over time. You’ll quickly see where you’re strong and where you need more reps, with trends that prove your readiness is moving in the right direction.

Targeted practice turns insights into action. Generate custom quizzes from weak areas, mix in recently missed questions, and dial difficulty up or down. Optional spaced repetition resurfaces concepts right before you’re likely to forget them.

What's in the DEA-C01 exam

The AWS Certified Data Engineer—Associate exam validates the skills and expertise required to build, maintain, and optimize data processing systems on the AWS platform. It assesses the candidate's ability to manage data throughout its lifecycle, including ingestion, transformation, storage, and analysis. The exam is particularly relevant for professionals creating robust, scalable, secure data infrastructure critical for data-driven decision-making and AI-powered solutions.

This certification covers a range of topics, including streaming and batch data ingestion, automated data pipeline construction, data transformation techniques, storage services, and database management. Security and governance, such as encryption, masking, and access controls, are also critical components. Additionally, the exam tests knowledge of advanced topics like data cataloging, monitoring, auditing, and troubleshooting data operations, ensuring candidates can maintain efficient systems.

DEA-C01 Stats

The AWS Certified Data Engineer—Associate (DEA-C01) exam has a pass or fail designation. It is scored against a minimum standard established by AWS professionals who follow certification industry best practices and guidelines. Your results for the exam are reported as a scaled score of 100–1,000. The minimum passing score is 720.

What are the DEA-C01 Questions Like?

There are two types of questions on the exam: multiple choice, which has one correct response and three incorrect responses, or distractors, and multiple responses, which have two (or more) correct responses out of five or more response options.

During the exam, you will be asked to choose the best answer for scenarios to complete tasks to design and implement systems on AWS. The questions' overall length, complexity, and difficulty are longer and more complicated than what you expect from an associate-level certification exam. Most questions involve lengthy scenarios, usually several sentences to a couple of paragraphs.

Most of the answer choices will be several sentences long as well. So, take your time reading through these longer questions, and be sure to process every word you read in detail. Be on the lookout for repeated sentences across the possible answers with just a word or two changed.

Those one or two words can make all the difference when determining which answer is correct and which might be a distractor. Always do your best to eliminate these distractors as early as possible so you can focus more on the plausible answers and select the best answer to each question.

Like all exams, the AWS Certified Data Engineer – Associate certification from AWS is updated periodically and may eventually be retired or replaced. At some point, after AWS no longer offers this exam, the old editions of our practice exams will be retired.

AWS Certified Data Engineer – Associate Exam (DEA-C01) Objectives

The following table provides a breakdown of this book's exam coverage, showing you the weight of each section and the chapter where each objective or sub-objective is covered:

“Domain 1: Data Ingestion and Transformation 34%”

Excerpt From AWS Certified Data Engineer Study Guide Syed Humair This material may be protected by copyright.

| Subject Area | % of Exam |

|---|---|

| Domain 1: Data Ingestion and Transformation | 34% |

| Domain 2: Data Store Management | 26% |

| Domain 3: Data Operations and Support | 22% |

| Domain 4: Data Security and Governance | 18% |

Domain 1: Data Ingestion and Transformation (34%)

This domain focuses on getting data into AWS and preparing it for analysis or storage. It covers selecting appropriate AWS services (like Kinesis for streaming, DMS for database migration, Glue ETL, Snow Family for bulk transfer, SFTP, DataSync) for various data sources (databases, streams, files, APIs) and velocities (batch, near-real-time, real-time). Key tasks include configuring ingestion pipelines, handling different data formats (JSON, CSV, Parquet, Avro), managing schemas, and validating incoming data.

Furthermore, this domain emphasizes data transformation techniques using AWS Glue, EMR, Lambda, and Kinesis Data Analytics services. This involves cleaning, normalizing, enriching, partitioning, and converting data into optimized formats (like Parquet or ORC) suitable for data lakes or warehouses. Understanding ETL (Extract, Transform, Load) vs. ELT (Extract, Load, Transform) patterns and optimizing transformation jobs for performance and cost are crucial.

Domain 2: Data Store Management (26%)

This domain covers the effective storage and management of data within the AWS ecosystem. It requires understanding the characteristics and use cases of various AWS storage options, including object storage (S3), data warehouses (Redshift), NoSQL databases (DynamoDB), relational databases (RDS/Aurora), and data lake storage patterns. Candidates need to know how to choose the right storage solution based on data structure, access patterns, query requirements, durability, and cost. Key activities include managing data lifecycle policies (e.g., S3 Intelligent-Tiering, Glacier), implementing partitioning strategies in data lakes and data warehouses for query optimization, defining and managing data catalogs (using AWS Glue Data Catalog), understanding data modeling concepts, and optimizing storage for cost and performance (e.g., compression, file formats). Ensuring data is organized, accessible, and efficiently stored is the core focus.

Domain 3: Data Operations and Monitoring (22%)

This domain focuses on the operational aspects of maintaining, monitoring, and ensuring the reliability of data pipelines and workflows. It involves using AWS services to orchestrate data processing jobs, manage dependencies, and schedule executions (e.g., Step Functions, Glue Workflows, and Managed Workflows for Apache Airflow). Monitoring pipeline health, performance, and data quality is critical, utilizing services like CloudWatch (Logs, Metrics, Alarms) and potentially AWS Glue Data Quality.

Candidates must be proficient in troubleshooting pipeline failures, diagnosing performance bottlenecks, and implementing logging and alerting mechanisms. This domain also covers automating deployment and operational tasks, managing pipeline versions, optimizing resource utilization, and implementing strategies for handling failures and ensuring data processing resilience and efficiency.

Domain 4: Data Security and Governance (18%)

This domain covers the critical aspects of securing data assets and implementing data governance practices within AWS. It includes applying security best practices such as managing authentication and authorization using IAM (roles, policies), implementing encryption both at rest (using KMS, S3 server-side encryption) and in transit (TLS/SSL), and configuring network security (VPCs, security groups, endpoints). Securing access to data stores and processing services is paramount. Governance aspects involve managing data access controls at granular levels (e.g., using Lake Formation permissions), implementing data cataloging for discovery and lineage, defining and monitoring data quality rules, and ensuring compliance with relevant regulations and organizational policies. Data privacy techniques (like masking or tokenization), data retention policies, and audit logging are essential components of this domain.

Sample DEA-C01 questions

Get a taste of the AWS Certified Data Engineer - Associate exam with our carefully curated sample questions below. These questions mirror the actual DEA-C01 exam's style, complexity, and subject matter, giving you a realistic preview of what to expect. Each question comes with comprehensive explanations, relevant documentation references, and valuable test-taking strategies from our expert instructors.

While these sample questions provide excellent study material, we encourage you to try our free demo for the complete DEA-C01 exam preparation experience. The demo features our state-of-the-art test engine that simulates the real exam environment, helping you build confidence and familiarity with the exam format. You'll experience timed testing, question marking, and review capabilities – just like the actual certification exam.

A data engineer needs to create an AWS Lambda function that converts the format of data from .csv to Apache Parquet. The Lambda function must run only if a user uploads a .csv file to an Amazon S3 bucket.

Which solution will meet these requirements with the least operational overhead?

Create an S3 event notification that has an event type of s3:ObjectTagging:* for objects that have a tag set to .csv. Set the Amazon Resource Name (ARN) of the Lambda function as the destination for the event notification.

Create an S3 event notification that has an event type of s3:ObjectCreated:*. Use a filter rule to generate notifications only when the suffix includes .csv. Set an Amazon Simple Notification Service (Amazon SNS) topic as the destination for the event notification. Subscribe the Lambda function to the SNS topic.

Create an S3 event notification that has an event type of s3:*. Use a filter rule to generate notifications only when the suffix includes .csv. Set the Amazon Resource Name (ARN) of the Lambda function as the destination for the event notification.

Create an S3 event notification that has an event type of s3:ObjectCreated:*. Use a filter rule to generate notifications only when the suffix includes .csv. Set the Amazon Resource Name (ARN) of the Lambda function as the destination for the event notification.

Creating an S3 event notification configured with the s3:ObjectCreated:* event type ensures that the notification triggers whenever a new object is successfully uploaded to the bucket (including Put, Post, Copy, and Multipart Upload completion). Using a suffix filter rule for .csv ensures that the notification is generated, and thus the Lambda function is invoked, only when the uploaded object's key name ends with .csv. Setting the Lambda function's ARN directly as the destination for the event notification provides a direct invocation mechanism without intermediate services. This combination precisely meets the requirements (trigger on CSV upload only) with the minimum number of AWS resources and configuration steps, resulting in the least operational overhead.

Configuring the event type s3:ObjectTagging:* is incorrect because this event triggers when tags are added or modified on an S3 object, not when the object is initially created or uploaded. The requirement is to trigger the Lambda function upon file upload.

Using the event type s3:* is inefficient and overly broad. While it includes object creation events, it also includes many other events like object deletion (s3:ObjectRemoved:*), tagging (s3:ObjectTagging:*), etc. Although the suffix filter would prevent the Lambda from processing non-CSV files, the event notification system would still generate notifications for non-creation events involving CSV files (like deletion or tagging), potentially causing unnecessary triggers or requiring more complex handling logic within the Lambda function if it were invoked for events other than creation. Using s3:ObjectCreated:* is more specific and efficient.

Introducing an Amazon SNS topic as an intermediary between the S3 event notification and the Lambda function adds an extra component to manage (the SNS topic itself, its access policies, and the Lambda subscription to the topic). While this architecture is valid and useful for scenarios requiring fan-out to multiple subscribers or decoupling, it increases the operational overhead compared to directly invoking the Lambda function from the S3 event notification. The requirement specifically asks for the solution with the least operational overhead.

For 'least operational overhead' questions involving AWS service integrations, always favor the most direct path allowed by the services. Avoid introducing intermediate services like SQS or SNS unless they are explicitly required for features like decoupling, buffering, or fan-out, which are not mentioned in this scenario. Also, be precise with event types (e.g., s3:ObjectCreated:* vs. s3:*) to minimize unnecessary triggers.

A marketing company collects clickstream data. The company sends the clickstream data to Amazon Kinesis Data Firehose and stores the clickstream data in Amazon S3. The company wants to build a series of dashboards that hundreds of users from multiple departments will use.

The company will use Amazon QuickSight to develop the dashboards. The company wants a solution that can scale and provide daily updates about clickstream activity.

Which combination of steps will meet these requirements most cost-effectively? (Choose two.)

Access the query data through a QuickSight direct SQL query.

Use Amazon Athena to query the clickstream data.

Use Amazon S3 analytics to query the clickstream data.

Access the query data through QuickSight SPICE (Super-fast, Parallel, In-memory Calculation Engine). Configure a daily refresh for the dataset.

Use Amazon Redshift to store and query the clickstream data.

First, consider the query engine. The data resides in Amazon S3. Amazon Athena is a serverless query service that allows you to analyze data directly in Amazon S3 using standard SQL. It scales automatically and you pay only for the queries you run, making it highly cost-effective for querying data stored in S3, especially when query patterns might vary. Loading the data into Amazon Redshift would incur additional ETL effort and the costs associated with running a Redshift cluster (provisioned or serverless), which might not be the most cost-effective approach compared to querying directly from S3 with Athena. Amazon S3 Analytics is used for analyzing storage access patterns, not querying the data content itself.

Second, consider the QuickSight data access method. QuickSight offers two modes: Direct Query and SPICE (Super-fast, Parallel, In-memory Calculation Engine).

- Direct Query mode runs queries against the underlying data source (Athena, in this case) in real-time as users interact with the dashboard. With hundreds of users, this could lead to a high volume of Athena queries, potentially increasing costs significantly (Athena charges per query/data scanned) and potentially impacting dashboard performance.

- SPICE imports a copy of the data into a highly optimized, in-memory cache within QuickSight. Queries from dashboards then hit the SPICE layer, providing fast performance and reducing the query load on the underlying source. SPICE datasets can be scheduled to refresh periodically (e.g., daily). For a large number of users accessing the same dataset, SPICE is generally more cost-effective and provides better performance than Direct Query mode, especially when the underlying source is pay-per-query like Athena. The requirement for daily updates aligns perfectly with SPICE's scheduled refresh capability.

Therefore, the most cost-effective and scalable solution involves using Amazon Athena to query the clickstream data directly from S3 and accessing this data in QuickSight through SPICE, configured with a daily refresh.

When designing QuickSight solutions with many users and pay-per-query data sources like Athena, strongly consider SPICE. SPICE optimizes for dashboard performance and cost by caching data and reducing queries to the source. Match the SPICE refresh frequency to the required data freshness (e.g., daily updates = daily SPICE refresh).

References:

A data engineer needs to create an Amazon Athena table based on a subset of data from an existing Athena table named cities_world. The cities_world table contains cities that are located around the world. The data engineer must create a new table named cities_us to contain only the cities from cities_world that are located in the US.

Which SQL statement should the data engineer use to meet this requirement?

UPDATE cities_usa SET (city, state) = (SELECT city, state FROM cities_world WHERE country=’usa’);

INSERT INTO cities_usa SELECT city, state FROM cities_world WHERE country=’usa’;

MOVE city, state FROM cities_world TO cities_usa WHERE country=’usa’;

INSERT INTO cities_usa (city,state) SELECT city, state FROM cities_world WHERE country=’usa’;

The requirement is to populate a new table, cities_us, with a specific subset of data (cities located in the US) from an existing table, cities_world. While the question uses the word "create," the provided SQL options focus on data manipulation (UPDATE, INSERT, MOVE) rather than table creation (CREATE TABLE). The most direct way to create a table based on a query result in Athena is using CREATE TABLE AS SELECT (CTAS). Since CTAS is not offered as an option, the question likely assumes the table cities_us already exists with the appropriate columns (city, state), and the task is to insert the relevant data into it.

The standard SQL syntax for inserting data into a table based on a selection from another table is INSERT INTO ... SELECT ....

The statement INSERT INTO cities_usa (city,state) SELECT city, state FROM cities_world WHERE country=’usa’; correctly performs this operation:

INSERT INTO cities_usa (city,state): Specifies the target table (cities_usa) and the columns (city,state) into which data will be inserted.SELECT city, state FROM cities_world: Selects the required columns from the source table (cities_world).WHERE country=’usa’: Filters the rows fromcities_worldto include only those where the country is 'usa'.

This statement effectively copies the city and state for all US cities from the source table into the target table.

The statement UPDATE cities_usa ... is incorrect because UPDATE modifies existing rows, it does not insert new rows.

The statement MOVE ... is not valid SQL syntax for this purpose.

The statement INSERT INTO cities_usa SELECT city, state FROM cities_world WHERE country=’usa’; is also syntactically valid if the columns city and state are the first two columns (or the only columns) in the cities_usa table and match the order in the SELECT list. However, explicitly listing the target columns, as done in the correct answer, is generally considered better practice as it is more robust to changes in table structure.

Understand the difference between CREATE TABLE AS SELECT (CTAS), which creates and populates a table in one step, and INSERT INTO ... SELECT, which populates an existing table. If CTAS is not an option and the goal is to have a table with a subset of data, look for the INSERT INTO ... SELECT pattern, assuming the table structure exists.

A data engineer needs to create a new empty table in Amazon Athena that has the same schema as an existing table named old_table.

Which SQL statement should the data engineer use to meet this requirement?

CREATE TABLE new_table (LIKE old_table);

CREATE TABLE new_table AS (SELECT * FROM old_table) WITH NO DATA;

INSERT INTO new_table SELECT * FROM old_table;

CREATE TABLE new_table AS SELECT * FROM old_tables;

Amazon Athena supports the CREATE TABLE AS SELECT (CTAS) statement, which creates a new table based on the results of a SELECT query. The schema of the new table is derived from the columns and data types returned by the SELECT statement.

To create the table with the same schema but without copying the data, Athena provides the WITH NO DATA clause for CTAS statements. The statement CREATE TABLE new_table AS SELECT * FROM old_table WITH NO DATA; instructs Athena to:

- Determine the schema by evaluating the

SELECT * FROM old_tablequery. - Create

new_tablewith that derived schema. - Skip the execution of the

SELECTquery for data population due to theWITH NO DATAclause.

This results in a new, empty table (new_table) with the identical schema as old_table.

The statement CREATE TABLE new_table (LIKE old_table); is not supported syntax in Athena for creating a table based on another table's schema. The LIKE clause in Athena's CREATE TABLE is used differently, typically related to SerDe properties when creating tables based on files.

The statement INSERT INTO new_table SELECT * FROM old_table; is used to copy data into an existing table (new_table) and does not create the table itself.

The statement CREATE TABLE new_table AS SELECT * FROM old_table; is a standard CTAS statement that does copy all data from old_table into new_table, which violates the requirement for the new table to be empty.

Remember the CREATE TABLE AS SELECT (CTAS) pattern in Athena. Use the WITH NO DATA clause when you need to duplicate a table's structure without duplicating its contents.

Files from multiple data sources arrive in an Amazon S3 bucket on a regular basis. A data engineer wants to ingest new files into Amazon Redshift in near real time when the new files arrive in the S3 bucket.

Which solution will meet these requirements?

Use the query editor v2 to schedule a COPY command to load new files into Amazon Redshift.

Use S3 Event Notifications to invoke an AWS Lambda function that loads new files into Amazon Redshift.

Use the zero-ETL integration between Amazon Aurora and Amazon Redshift to load new files into Amazon Redshift.

Use AWS Glue job bookmarks to extract, transform, and load (ETL) load new files into Amazon Redshift.

Amazon S3 Event Notifications provide a mechanism to automatically trigger downstream actions when specific events occur within an S3 bucket, such as the creation of a new object (s3:ObjectCreated:*). You can configure S3 Event Notifications to send a message to various targets, including an AWS Lambda function.

When a new file arrives in the designated S3 bucket, S3 will automatically invoke the configured Lambda function, passing event details that include the bucket name and the object key (file name). The Lambda function can then use this information to connect to the Amazon Redshift cluster (e.g., using the Redshift Data API or a standard database driver) and execute a COPY command to load the specific new file into the target Redshift table. This approach provides a highly responsive, serverless, and near real-time ingestion pipeline.

Scheduling a COPY command using the query editor v2 is time-based, not event-based. It runs on a fixed schedule (e.g., every hour), leading to potential delays between file arrival and ingestion, thus not meeting the near real-time requirement.

The zero-ETL integration is specifically designed for replicating data changes from Amazon Aurora or Amazon RDS for MySQL databases to Amazon Redshift, not for ingesting files from S3.

AWS Glue job bookmarks are used to track processed data, enabling Glue jobs to process only new data since the last run. While Glue jobs can be triggered by S3 events (typically via EventBridge), running a full Glue ETL job for each arriving file might introduce latency and overhead compared to a lightweight Lambda function executing a COPY command, making it less suitable for near real-time, per-file ingestion.

For near real-time processing triggered by S3 object creation, the combination of S3 Event Notifications and AWS Lambda is a standard and effective pattern. Lambda's low startup time and event-driven nature make it ideal for reacting quickly to file arrivals.

A retail company uses an Amazon Redshift data warehouse and an Amazon S3 bucket. The company ingests retail order data into the S3 bucket every day.

The company stores all order data at a single path within the S3 bucket. The data has more than 100 columns. The company ingests the order data from a third-party application that generates more than 30 files in CSV format every day. Each CSV file is between 50 and 70 MB in size.

The company uses Amazon Redshift Spectrum to run queries that select sets of columns. Users aggregate metrics based on daily orders. Recently, users have reported that the performance of the queries has degraded. A data engineer must resolve the performance issues for the queries.

Which combination of steps will meet this requirement with LEAST developmental effort? (Choose two.)

Load the JSON data into the Amazon Redshift table in a SUPER type column.

Configure the third-party application to create the files in JSON format.

Partition the order data in the S3 bucket based on order date.

Develop an AWS Glue ETL job to convert the multiple daily CSV files to one file for each day.

Configure the third-party application to create the files in a columnar format.

The scenario describes performance degradation for Amazon Redshift Spectrum queries against CSV files stored in Amazon S3. The queries select subsets of columns and aggregate metrics based on daily orders. The data includes many columns (>100), and all files are stored in a single path. To improve query performance with minimal development effort, we need to address the inefficiencies in data format and layout.

Columnar Format: CSV is a row-based format. When Spectrum queries select only a subset of columns from a wide table (100+ columns), it must still read entire rows, including data from columns not requested. Switching to a columnar format like Apache Parquet or ORC allows Spectrum to read data only for the columns specified in the query, significantly reducing the amount of data scanned and improving performance. Configuring the third-party application to generate files directly in a columnar format, if supported, would be the most efficient way to achieve this, minimizing downstream processing effort.

Partitioning: The queries aggregate metrics based on daily orders, implying frequent filtering or grouping by date. Storing all files in a single path forces Spectrum to list and potentially scan all files, even if the query only targets a specific date range. Partitioning the data in the S3 bucket based on the order date (e.g., creating prefixes like

s3://your-bucket/orders/order_date=YYYY-MM-DD/) allows Spectrum to perform partition pruning. Spectrum can identify and scan only the partitions (and files within them) that match the date predicates in theWHEREclause, drastically reducing the data scanned for date-specific queries.

Combining these two approaches addresses the key performance bottlenecks:

- Using a columnar format optimizes queries that select specific columns.

- Partitioning by date optimizes queries that filter or aggregate by date.

Let's consider the other options:

- Configuring the application to create files in JSON format does not solve the performance issue for selecting subsets of columns, as JSON is also row-oriented.

- Loading JSON data into a Redshift SUPER column involves moving data from S3 into Redshift's local storage, which changes the architecture away from Redshift Spectrum and adds complexity.

- Developing an AWS Glue ETL job to consolidate multiple daily files into one large file might offer minor benefits by reducing S3 listing overhead, but it doesn't address the core inefficiencies of the row-based format or lack of partitioning. It also adds development effort.

Therefore, partitioning the data by order date in S3 and configuring the source application to use a columnar format are the most effective steps to improve Redshift Spectrum query performance with potentially the least development effort.

When optimizing query performance for Redshift Spectrum or Athena against data in S3, always consider data format and partitioning. Columnar formats (Parquet, ORC) are best for queries selecting subsets of columns, especially with wide tables. Partitioning is crucial for filtering data based on low-cardinality columns frequently used in WHERE clauses (like date, region, etc.). Aim to implement these optimizations as close to the data source as possible to minimize downstream ETL effort.

A company receives a data file from a partner each day in an Amazon S3 bucket. The company uses a daily AWS Glue extract, transform, and load (ETL) pipeline to clean and transform each data file. The output of the ETL pipeline is written to a CSV file named Daily.csv in a second S3 bucket.

Occasionally, the daily data file is empty or is missing values for required fields. When the file is missing data, the company can use the previous day’s CSV file.

A data engineer needs to ensure that the previous day's data file is overwritten only if the new daily file is complete and valid.

Which solution will meet these requirements with the least effort?

Run a SQL query in Amazon Athena to read the CSV file and drop missing rows. Copy the corrected CSV file to the second S3 bucket.

Use AWS Glue Studio to change the code in the ETL pipeline to fill in any missing values in the required fields with the most common values for each field.

Configure the AWS Glue ETL pipeline to use AWS Glue Data Quality rules. Develop rules in Data Quality Definition Language (DQDL) to check for missing values in required fields and empty files.

Invoke an AWS Lambda function to check the file for missing data and to fill in missing values in required fields.

AWS Glue Data Quality is a feature designed specifically for validating data within AWS Glue ETL jobs. It allows defining rules using the Data Quality Definition Language (DQDL) to assess various aspects of data quality.

By integrating the EvaluateDataQuality transform into the existing Glue ETL job, the data engineer can:

- Define DQDL rules to check if the dataset is empty (e.g.,

RowCount > 0). - Define DQDL rules to check for completeness in required fields (e.g.,

IsComplete "required_column_name"). - Configure the Glue job's logic based on the outcome of the data quality evaluation. If the rules pass, the job proceeds to write the transformed data to the output S3 bucket, overwriting

Daily.csv. If the rules fail, the job can be configured to stop or skip the final write step, effectively leaving the previous day'sDaily.csvin place.

This approach uses a built-in AWS Glue feature, requires configuration of rules rather than extensive custom coding, and integrates the validation directly into the existing ETL pipeline. This represents the least implementation effort compared to other options.

Using Amazon Athena adds an extra processing step after the Glue job. Modifying the Glue pipeline to fill missing values doesn't meet the requirement of checking validity and preventing overwrites. Invoking an AWS Lambda function requires writing custom validation code and potentially managing orchestration, increasing the effort compared to using the native Glue Data Quality feature.

Leverage built-in features like AWS Glue Data Quality for validation tasks within Glue ETL pipelines. This often requires less development and operational effort than building custom checks using other services or libraries.

A company stores customer records in Amazon S3. The company must not delete or modify the customer record data for 7 years after each record is created. The root user also must not have the ability to delete or modify the data.

A data engineer wants to use S3 Object Lock to secure the data.

Which solution will meet these requirements?

Enable governance mode on the S3 bucket. Use a default retention period of 7 years.

Place a legal hold on individual objects in the S3 bucket. Set the retention period to 7 years.

Set the retention period for individual objects in the S3 bucket to 7 years.

Enable compliance mode on the S3 bucket. Use a default retention period of 7 years.

The requirement is to ensure customer records in Amazon S3 cannot be deleted or modified for 7 years, even by the root user. Amazon S3 Object Lock provides two retention modes to achieve Write-Once-Read-Many (WORM) storage:

- Governance Mode: Protects objects from deletion and modification by most users. However, users with the

s3:BypassGovernanceRetentionpermission, including the root user, can override or remove the retention settings. - Compliance Mode: Provides a higher level of protection. Once an object version is locked in compliance mode, its retention mode cannot be changed, and its retention period cannot be shortened. Critically, no user, including the root user of the AWS account, can overwrite or delete an object version protected by compliance mode during its retention period.

A retention period specifies the length of time the object version remains locked. This period can be set explicitly for an object or automatically applied using a bucket's default retention settings.

To meet the requirement that even the root user cannot delete or modify the data for 7 years, Compliance Mode must be used. Setting a default retention period of 7 years on the bucket ensures that all new objects automatically inherit this lock configuration.

Placing a legal hold prevents deletion/modification but does not have a fixed duration; it remains until explicitly removed and doesn't automatically apply a 7-year term. Using governance mode does not satisfy the requirement of preventing deletion by the root user. Setting only the retention period without specifying compliance mode does not guarantee the required level of protection.

Therefore, enabling compliance mode on the bucket with a default 7-year retention period is the correct solution.

Pay close attention to the differences between S3 Object Lock's Governance and Compliance modes. Compliance mode offers stricter protection, preventing deletion even by the root user, which is often required for regulatory compliance scenarios like the one described.

A retail company is expanding its operations globally. The company needs to use Amazon QuickSight to accurately calculate currency exchange rates for financial reports. The company has an existing dashboard that includes a visual that is based on an analysis of a dataset that contains global currency values and exchange rates.

A data engineer needs to ensure that exchange rates are calculated with a precision of four decimal places. The calculations must be precomputed. The data engineer must materialize results in QuickSight super-fast, parallel, in-memory calculation engine (SPICE).

Which solution will meet these requirements?

Define and create the calculated field in the visual.

Define and create the calculated field in the dataset.

Define and create the calculated field in the analysis.

Define and create the calculated field in the dashboard.

Amazon QuickSight allows calculated fields to be created at different stages:

- Dataset Level: When you create a calculated field during dataset preparation (before publishing or saving), the calculation is performed as the data is ingested into SPICE. The results of this calculation are then stored within SPICE along with the original data. This meets the requirement for precomputing the calculation and materializing it in SPICE.

- Analysis/Visual/Dashboard Level: Calculated fields created within an analysis (and subsequently available in visuals and published dashboards) are computed dynamically when the analysis or dashboard is viewed or interacted with. These calculations are performed on the data retrieved from SPICE (or via Direct Query), but the results of the calculation itself are not stored back into SPICE.

To ensure the exchange rate calculations are precomputed and materialized in SPICE, the calculated field must be defined and created at the dataset level. This guarantees that the computation happens during data preparation/ingestion into SPICE, and the resulting values (with the desired precision) are stored efficiently within SPICE for fast retrieval during analysis.

Defining the calculated field in the visual, analysis, or dashboard would mean the calculation happens dynamically at query time, not meeting the requirement for precomputation and SPICE materialization.

Understand the difference between creating calculated fields at the dataset level versus the analysis level in QuickSight. Dataset-level calculations are precomputed and stored in SPICE (if used), improving performance for complex calculations and ensuring consistent results. Analysis-level calculations offer more flexibility during exploration but are computed dynamically.

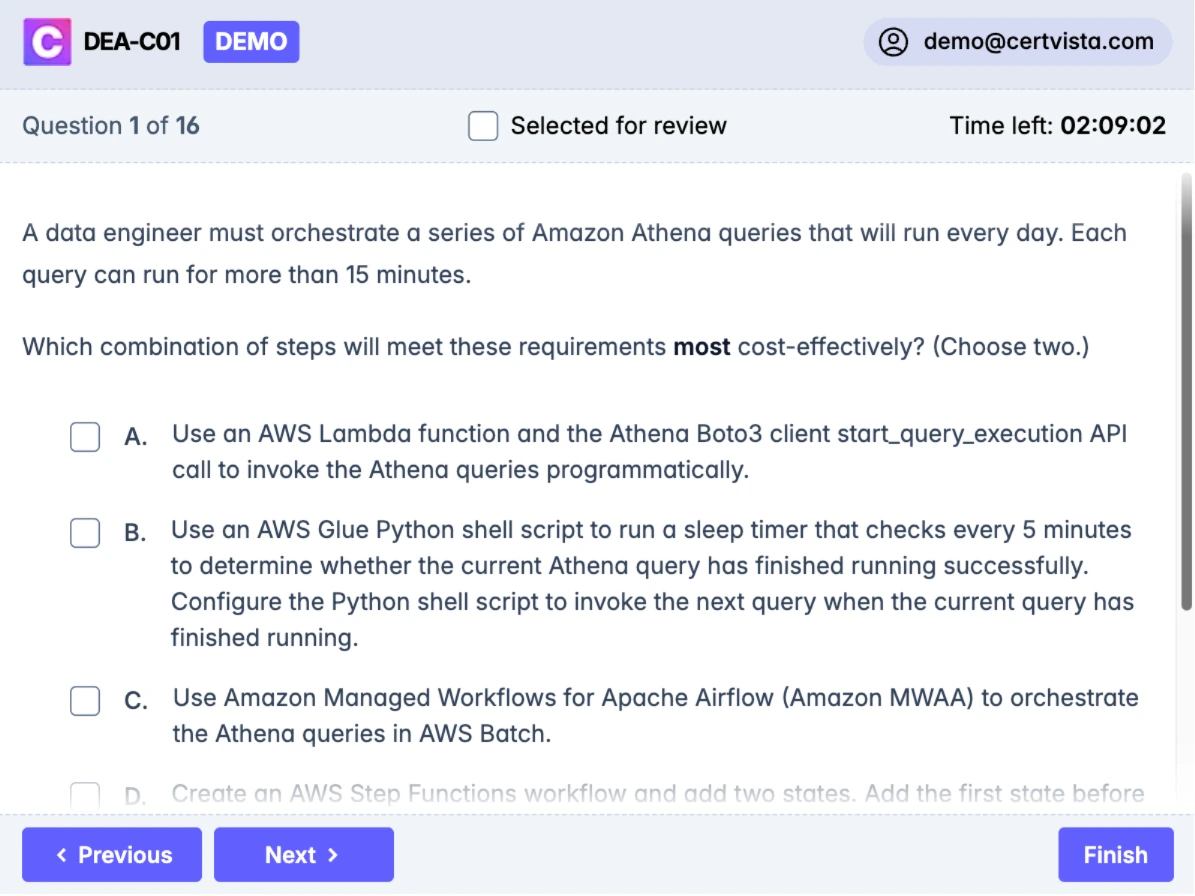

A data engineer must orchestrate a series of Amazon Athena queries that will run every day. Each query can run for more than 15 minutes.

Which combination of steps will meet these requirements most cost-effectively? (Choose two.)

Use an AWS Lambda function and the Athena Boto3 client start_query_execution API call to invoke the Athena queries programmatically.

Create an AWS Step Functions workflow and add two states. Add the first state before the Lambda function. Configure the second state as a Wait state to periodically check whether the Athena query has finished using the Athena Boto3 get_query_execution API call. Configure the workflow to invoke the next query when the current query has finished running.

Use Amazon Managed Workflows for Apache Airflow (Amazon MWAA) to orchestrate the Athena queries in AWS Batch.

Use an AWS Glue Python shell script to run a sleep timer that checks every 5 minutes to determine whether the current Athena query has finished running successfully. Configure the Python shell script to invoke the next query when the current query has finished running.

Use an AWS Glue Python shell job and the Athena Boto3 client start_query_execution API call to invoke the Athena queries programmatically.

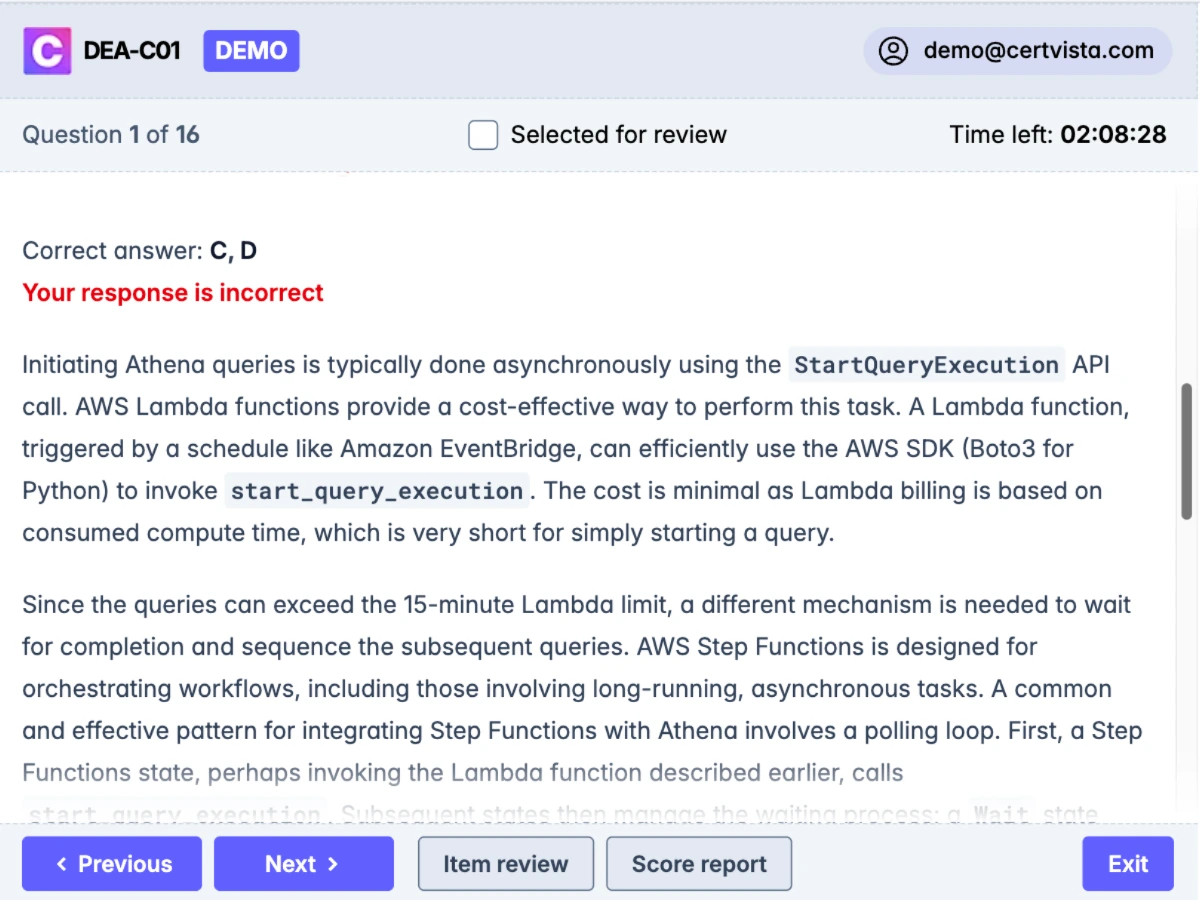

Initiating Athena queries is typically done asynchronously using the StartQueryExecution API call. AWS Lambda functions provide a cost-effective way to perform this task. A Lambda function, triggered by a schedule like Amazon EventBridge, can efficiently use the AWS SDK (Boto3 for Python) to invoke start_query_execution. The cost is minimal as Lambda billing is based on consumed compute time, which is very short for simply starting a query.

Since the queries can exceed the 15-minute Lambda limit, a different mechanism is needed to wait for completion and sequence the subsequent queries. AWS Step Functions is designed for orchestrating workflows, including those involving long-running, asynchronous tasks. A common and effective pattern for integrating Step Functions with Athena involves a polling loop. First, a Step Functions state, perhaps invoking the Lambda function described earlier, calls start_query_execution. Subsequent states then manage the waiting process: a Wait state introduces a pause, followed by another task state (again, potentially using Lambda) calling get_query_execution with the query ID to check its status (SUCCEEDED, FAILED, RUNNING). A Choice state evaluates the status, looping back to the Wait state if the query is still RUNNING, proceeding to the next query or step if SUCCEEDED, or triggering error handling if FAILED. This polling pattern allows Step Functions to manage the overall workflow across extended durations cost-effectively, with costs primarily associated with state transitions.

Combining these approaches creates a robust and cost-effective solution. An AWS Lambda function using the Athena Boto3 client's start_query_execution API call programmatically initiates each query. An AWS Step Functions workflow then orchestrates the sequence, using a polling loop with Wait states and tasks calling get_query_execution to ensure one query finishes before the next begins.

Using Amazon MWAA or AWS Batch would likely introduce higher baseline costs and operational complexity compared to this serverless approach for a daily batch process. Relying on AWS Glue Python shell jobs with manual sleep timers for polling is inefficient and not a recommended practice for robust orchestration.

For orchestrating asynchronous, long-running tasks on AWS (like Athena queries > 15 min, Glue jobs, Batch jobs), AWS Step Functions with a polling pattern (Wait state + task state checking status) is a standard, cost-effective, and serverless approach. Use Lambda functions for short tasks like initiating the job or checking its status.