AWS Certified Cloud Practitioner (CLF-C02)

- 826 exam-style questions

- Detailed explanations and references

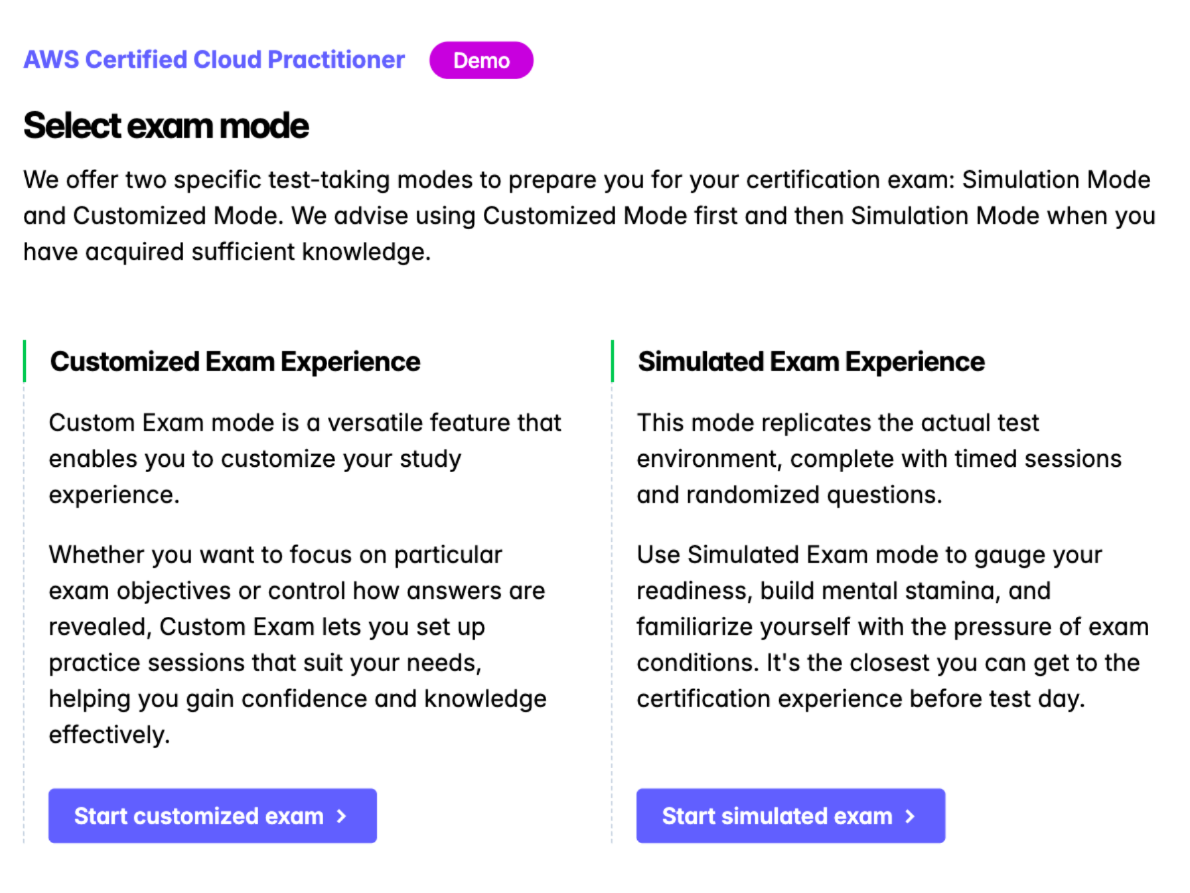

- Simulation and custom modes

- Custom exam settings to drill down into specific topics

- 180-day access period

- Pass or money back guarantee

What is in the package

The content, tone, and tenor of the questions mimic the actual exam. Along with the detailed explanations and the exam-taker tips, we have extensively referenced AWS documentation to get you up to speed on all domain areas tested for the CLF-C02 exam.

Please consider this course the final pit-stop so that you can cross the winning line with absolute confidence and get AWS Certified! Trust our process; you are in good hands.

Complete CLF-C02 domains coverage

Our practice exams fully align with the official AWS courseware and the Certified Cloud Practitioner exam objectives.

1. Cloud Concepts

CertVista CLF-C02 covers critical topics such as the benefits of the AWS Cloud, cloud design principles, migration strategies, and cloud economics, including how AWS can save your IT team large sums of money. This domain comprises 24% of the actual exam.

2. Security and Compliance

We cover security in general with AWS and provide details on implementing strong security with AWS services such as IAM and a wide variety of management tools. This domain makes up 30% of the real exam.

3. Cloud Technology and Services

This domain explores AWS's "nuts and bolts," including its global infrastructure and core services, such as compute and database services, storage, and AI/ML. It encompasses 34% of the exam.

4. Billing, Pricing, and Support

With CertVista CLF-C02, you'll learn about the tools and techniques for controlling costs inside AWS and the resources available to assist you. This domain accounts for 12% of the exam.

CertVista's CLF-C02 question bank contains hundreds of exam-style questions that mirror the AWS Certified Cloud Practitioner exam environment. Practice with multiple-choice, multiple-response, and scenario-based questions to familiarize yourself with every question type you'll face during certification. Our realistic testing environment helps you approach your exam with confidence.

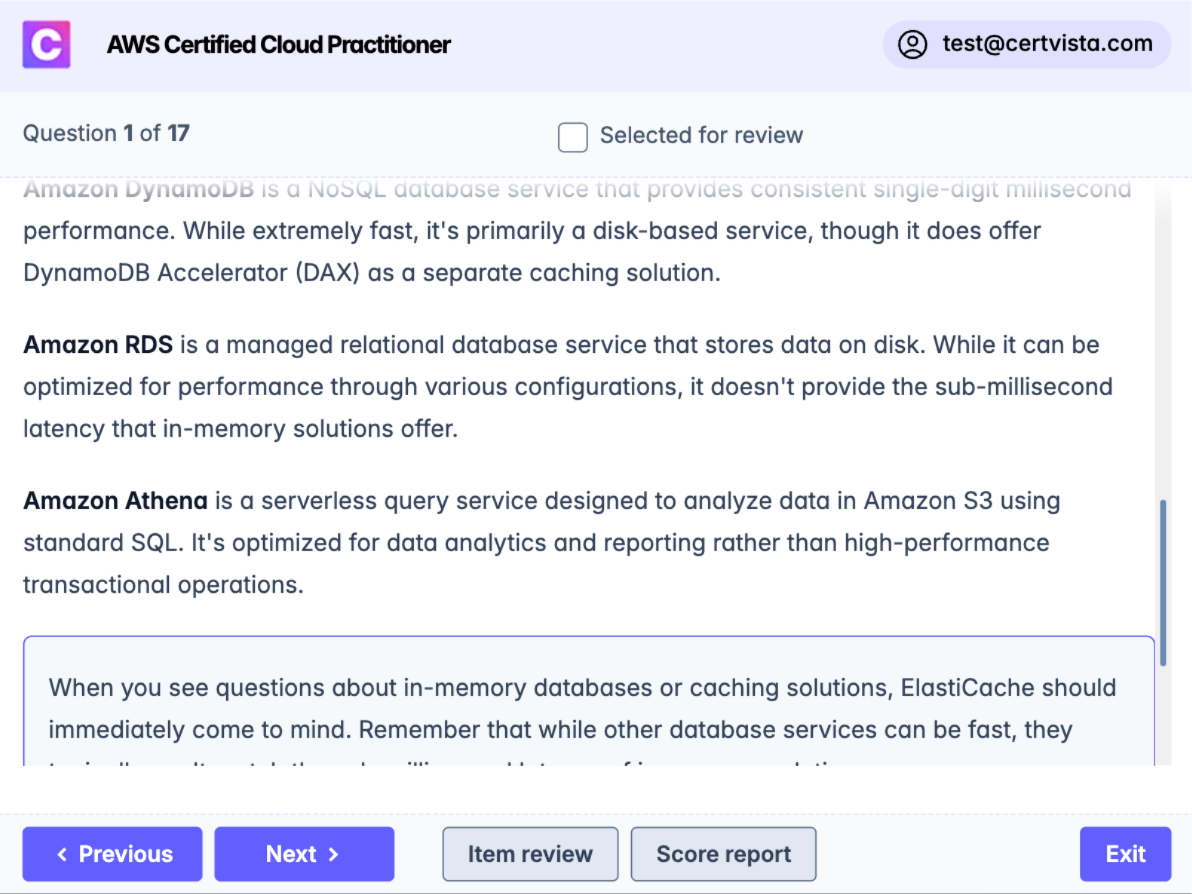

Every question includes comprehensive explanations that break down AWS concepts, services, and best practices. We explain the underlying AWS principles, reference official documentation, and clarify common misconceptions. This approach ensures you're prepared for the exam and real-world cloud implementation challenges.

CertVista offers two effective study modes: Custom Mode is for focused practice on specific AWS domains and is perfect for strengthening knowledge in targeted areas. Simulation Mode replicates the 90-minute exam environment with authentic time pressure and question distribution, building confidence and stamina.

Our analytics dashboard provides clear insights into your CLF-C02 exam preparation. Monitor performance across all exam domains, analyze your grasp of key AWS concepts, and identify knowledge gaps. These metrics help you create an efficient study strategy and know when you're ready for certification.

What's in the CLF-C02 exam

The AWS Certified Cloud Practitioner exam tests candidates’ overall understanding of the AWS Cloud and many of its critical services. This certification also serves to validate candidates’ knowledge with an industry-recognized credential.

The AWS Certified Cloud Practitioner certification is not a prerequisite for other AWS certifications, but it is a common first step toward the foundational AWS Certified AI Practitioner or toward associate-level certifications such as AWS Certified Developer, Solutions Architect, and SysOps Administrator.

The goals of the AWS Certified Cloud Practitioner program

To successfully pass the AWS CCP exam, a candidate will be expected to demonstrate the following:

- Explain the value of AWS Cloud services.

- Understand and be able to explain the AWS Shared Responsibility Model and how it would apply to their business or job.

- Understand and be able to explain security best practices for AWS accounts and the AWS Management Console.

- Understand how AWS pricing, costs, and budgets work, including the tools AWS provides for monitoring and tracking them.

- Describe the core and popular AWS service offerings across the major networking, compute, storage, database, and development areas.

- Be able to recommend and justify which AWS core services would apply to real-world scenarios.

The CCP exam format

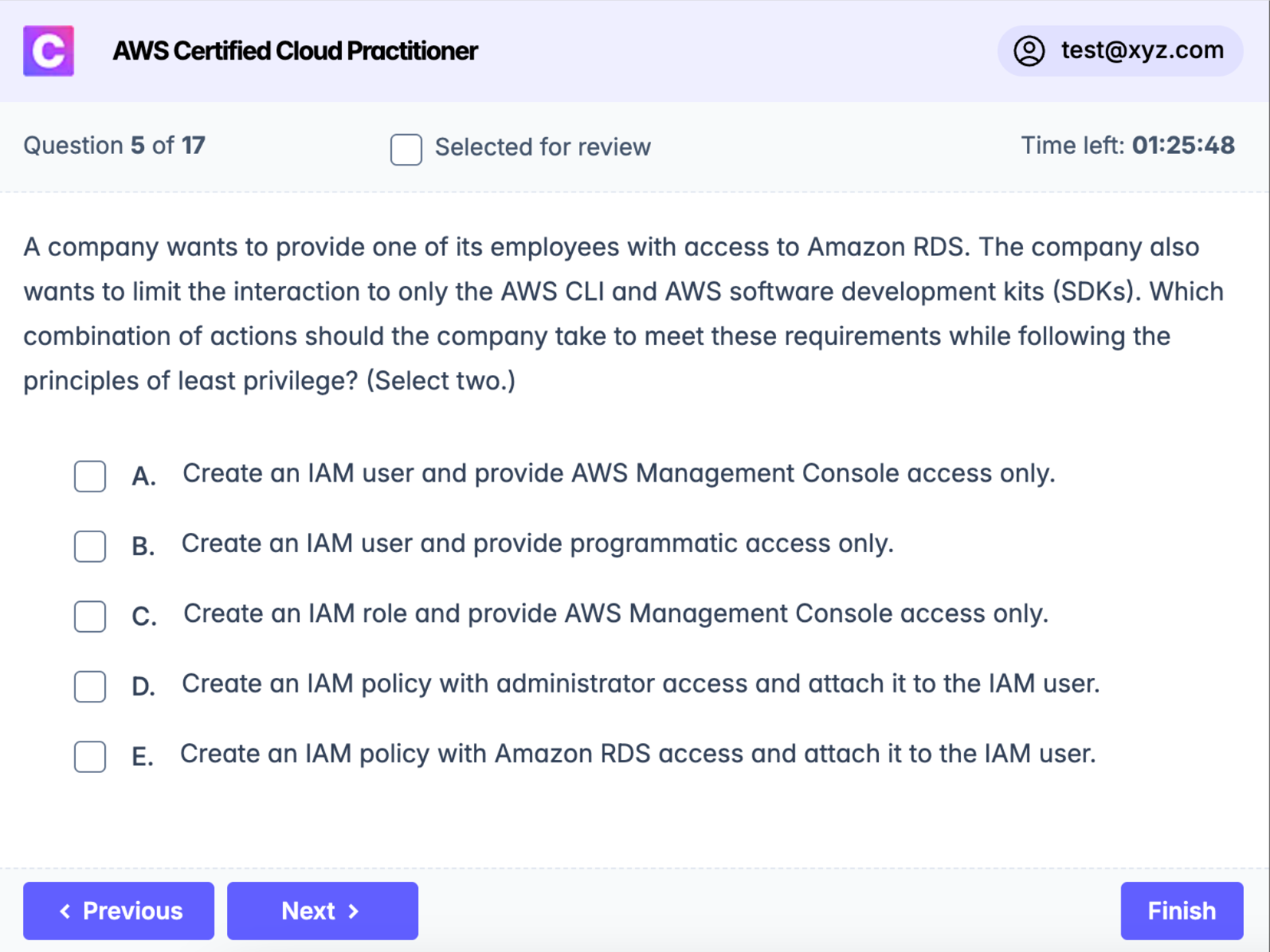

The AWS CCP exam consists of 65 questions and has a 90-minute time limit. Its current cost is $100 USD.

The exam consists of two types of questions: Multiple choice and Multiple response.

- Multiple choice: Each question has four possible answers, only one of which is correct.

- Multiple response: Each question has five or more possible answers, two or more of which are correct.

In addition, the exam can contain unscored content. You will not know which questions fall into this category.

After you complete the exam, your result is reported as a scaled score from 100 to 1,000. The minimum passing score is 700.

You will also receive a report that breaks down your performance on each section of the exam so you can see where you were strongest and which areas need improvement. However, the exam as a whole is graded pass/fail: you do not need to reach a certain level in each section, only a passing score overall. The number of questions from each section generally follows the weighted content distribution, so you will get more questions from some sections and fewer from others.

Ideal Candidates

While CertVista provides you with the information required to pass this exam, AWS considers ideal candidates to be those who possess the following:

- Up to six months of exposure to AWS Cloud design, implementation, and/or operations

- AWS knowledge in the following areas:

  - AWS Cloud concepts

  - Security and compliance in the AWS Cloud

  - Core AWS services

  - Economics of the AWS Cloud

The Exam Objectives

The AWS Certified Cloud Practitioner CLF-C02 exam has four major domains. The following table lists those domains and the percentage of the exam dedicated to each of them:

| Domain | Percentage of scored content |
|---|---|
| 1. Cloud Concepts | 24% |
| 2. Security and Compliance | 30% |
| 3. Cloud Technology and Services | 34% |
| 4. Billing, Pricing, and Support | 12% |
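As a rough illustration of how these weights translate into question counts, the sketch below assumes the 50 scored questions described in the official CLF-C02 exam guide (the other 15 of the 65 questions are unscored). Actual counts on any given exam form can differ slightly.

```python
# Domain weights from the table above (percent of scored content).
DOMAIN_WEIGHTS = {
    "Cloud Concepts": 24,
    "Security and Compliance": 30,
    "Cloud Technology and Services": 34,
    "Billing, Pricing, and Support": 12,
}

# Assumption: 50 scored questions out of 65 total, per the CLF-C02 exam guide.
SCORED_QUESTIONS = 50


def expected_question_counts(weights, scored):
    """Approximate scored questions per domain (integer arithmetic, rounded down)."""
    return {domain: scored * pct // 100 for domain, pct in weights.items()}


for domain, count in expected_question_counts(DOMAIN_WEIGHTS, SCORED_QUESTIONS).items():
    print(f"{domain}: about {count} questions")
```

With these weights the split works out to roughly 12, 15, 17, and 6 scored questions, which is why Cloud Technology and Services deserves the largest share of study time.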

Sample CLF-C02 questions

Get a taste of the AWS Certified Cloud Practitioner exam with our carefully curated sample questions below. These questions mirror the actual CLF-C02 exam's style, complexity, and subject matter, giving you a realistic preview of what to expect. Each question comes with comprehensive explanations, relevant documentation references, and valuable test-taking strategies from our expert instructors.

While these sample questions provide excellent study material, we encourage you to try our free demo for the complete CLF-C02 exam preparation experience. The demo features our state-of-the-art test engine that simulates the real exam environment, helping you build confidence and familiarity with the exam format. You'll experience timed testing, question marking, and review capabilities – just like the actual certification exam.

What is the correct statement about AWS Shield Advanced pricing and DDoS protection costs?

AWS Shield Advanced offers protection against higher fees that could result from a DDoS attack

AWS Shield Advanced is a free service for AWS Business Support plan

AWS Shield Advanced is a free service for all AWS Support plans

AWS Shield Advanced is a free service for AWS Enterprise Support plan

AWS Shield Advanced includes DDoS cost protection as one of its key features. If your protected AWS resources scale up because of a DDoS attack, you can request service credits for the resulting charges. This protection covers spikes in your AWS bill from increased Amazon EC2, Elastic Load Balancing, Amazon CloudFront, and Amazon Route 53 usage during a verified DDoS attack.

AWS Shield Advanced is not a free service for any support plan level. It's a paid service requiring a separate subscription and a significant annual commitment. The service costs $3,000 per month with a 12-month commitment, plus additional data transfer usage fees.
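To put the commitment in perspective, here is the minimum first-year subscription cost implied by the figures above, sketched in Python. Data transfer fees depend on usage, so they are left as a hypothetical input; check current AWS pricing before relying on these numbers.

```python
# Subscription figures from the explanation above.
MONTHLY_SUBSCRIPTION_USD = 3_000
COMMITMENT_MONTHS = 12


def first_year_cost(data_transfer_usd=0):
    """Minimum first-year Shield Advanced spend: 12 x $3,000 plus any
    data transfer usage fees (a hypothetical, usage-dependent input)."""
    return MONTHLY_SUBSCRIPTION_USD * COMMITMENT_MONTHS + data_transfer_usd


print(first_year_cost())  # 36000
```

Even before any data transfer fees, the 12-month commitment comes to $36,000, which is why no Support plan bundles Shield Advanced for free.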

The misconception about AWS Shield Advanced being free likely stems from confusion with AWS Shield Standard, which is included at no additional cost for all AWS customers. Shield Standard provides basic DDoS protection against common layer 3 and layer 4 attacks.

Business Support plan customers do not receive AWS Shield Advanced for free. While the Business Support plan includes many benefits, Shield Advanced requires a separate payment.

Enterprise Support plan customers also need to pay separately for AWS Shield Advanced. While Enterprise Support provides extensive benefits, it doesn't include Shield Advanced as a free service.

Don't confuse AWS Shield Standard (free) with AWS Shield Advanced (paid). When you see questions about Shield Advanced pricing, remember it's always a paid service with DDoS cost protection as a key benefit.


For a company looking to store long-term archival data that doesn't require immediate access, which Amazon S3 storage class would be the most appropriate and cost-effective choice?

Amazon S3 Intelligent-Tiering

Amazon S3 Standard

Amazon S3 Glacier Flexible Retrieval

Amazon S3 One Zone-Infrequent Access (S3 One Zone-IA)

Amazon S3 Glacier Flexible Retrieval is designed for data archiving and offers much lower storage costs than S3 Standard or the Infrequent Access classes; among the options listed, it is the cheapest for long-term retention (only S3 Glacier Deep Archive, which is not offered here, costs less). This storage class is ideal for data that must be preserved for years but doesn't require frequent or immediate access. Retrievals take from minutes (expedited) to several hours (standard and bulk), which is acceptable for archival use cases.

Let's examine why the other options aren't optimal for archival storage:

S3 Standard is designed for frequently accessed data with high availability and durability. While it offers immediate access, it comes at a higher cost, making it unsuitable for long-term archival storage where immediate access isn't required.

S3 Intelligent-Tiering automatically moves data between access tiers based on usage patterns. While this is great for data with changing access patterns, it's not cost-optimal for archival data that will rarely, if ever, be accessed.

S3 One Zone-IA stores data in a single Availability Zone at a lower cost than Standard storage. However, it's designed for infrequently accessed data that can be recreated, not critical archival data that needs long-term durability across multiple Availability Zones.

When considering storage classes for archival data, remember that the key trade-off is usually between access speed and cost. The longer retrieval time of Glacier Flexible Retrieval is balanced by its significantly lower storage costs, making it ideal for archival purposes.
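In practice, archival data usually reaches this storage class through an S3 Lifecycle rule. The sketch below builds a lifecycle configuration as a plain Python dictionary in the shape the S3 API expects; the prefix, rule ID, and 30-day delay are hypothetical. In the API, Glacier Flexible Retrieval is identified by the storage class name GLACIER.

```python
import json

# Hypothetical values for illustration only.
ARCHIVE_PREFIX = "archive/"
DAYS_BEFORE_ARCHIVING = 30


def glacier_lifecycle_configuration(prefix, days):
    """Lifecycle rule that transitions objects under `prefix` to
    S3 Glacier Flexible Retrieval (API name "GLACIER") after `days` days."""
    return {
        "Rules": [
            {
                "ID": "archive-to-glacier-flexible-retrieval",  # hypothetical rule ID
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


config = glacier_lifecycle_configuration(ARCHIVE_PREFIX, DAYS_BEFORE_ARCHIVING)
print(json.dumps(config, indent=2))
```

With boto3 and valid credentials, this dictionary would be passed as `s3.put_bucket_lifecycle_configuration(Bucket="example-archive-bucket", LifecycleConfiguration=config)`, where the bucket name is hypothetical.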


Which perspective is part of the AWS Cloud Adoption Framework (AWS CAF)?

Process

Architecture

Business

Product

The Business perspective is indeed one of the six core perspectives of the AWS Cloud Adoption Framework (CAF). The AWS CAF is designed to help organizations develop efficient plans for their cloud adoption journey.

The Business perspective focuses on ensuring that IT aligns with business needs and that IT investments link to key business results. It helps business managers develop a strong business case for cloud adoption and ensures that the business strategies and goals align with the cloud initiatives. Senior leadership, finance managers, budget owners, and strategy stakeholders typically drive this perspective.

The other options mentioned are not official perspectives of the AWS CAF. To provide complete context, the six actual perspectives of AWS CAF are:

- Business

- People

- Governance

- Platform

- Security

- Operations

While important in cloud adoption, Process is not a dedicated CAF perspective. Instead, processes are considered from multiple perspectives, particularly Operations and Governance.

Architecture is a component that falls under the Platform perspective rather than being a separate perspective itself.

Product is not a CAF perspective, though product development strategies might be considered within the Business perspective.

Remember the six core perspectives and their primary purposes when studying the AWS CAF rather than getting distracted by related but incorrect terms.


A company has recently migrated to AWS Cloud and needs to receive detailed hourly cost breakdowns delivered to an S3 bucket. Which AWS service provides this capability?

AWS Cost Explorer

AWS Cost & Usage Report (AWS CUR)

AWS Pricing Calculator

AWS Budgets

The AWS Cost and Usage Report (AWS CUR) service is designed for this scenario as it provides the most comprehensive set of AWS cost and usage data. CUR can generate highly detailed reports down to the hourly level and deliver them directly to an S3 bucket - precisely what the company needs.

What makes AWS CUR the right fit is its granularity: it can break down charges by the hour and include resource-level usage details. You choose the time granularity of the line items (hourly, daily, or monthly), and AWS automatically delivers and refreshes the report files in your S3 bucket at least once a day. For companies that need advanced analysis, CUR integrates with Amazon Athena and Amazon Redshift for sophisticated data analysis and custom reporting.
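For a concrete picture, here is a minimal sketch of the report definition such a company might submit through the legacy Cost and Usage Reports API (PutReportDefinition). The report name, bucket, prefix, and Region are hypothetical, and the Parquet/overwrite settings reflect our understanding of what Athena integration requires; verify against the current CUR documentation. AWS now also offers CUR 2.0 through AWS Data Exports, which uses a different API.

```python
def hourly_cur_definition(bucket, prefix, bucket_region):
    """ReportDefinition dictionary for the CUR PutReportDefinition call:
    hourly line items with resource IDs, written as Parquet for Athena.
    All names passed in are hypothetical placeholders."""
    return {
        "ReportName": "hourly-cost-report",          # hypothetical name
        "TimeUnit": "HOURLY",                        # line-item granularity
        "Format": "Parquet",
        "Compression": "Parquet",
        "AdditionalSchemaElements": ["RESOURCES"],   # include resource IDs
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "S3Region": bucket_region,
        "AdditionalArtifacts": ["ATHENA"],
        "RefreshClosedReports": True,
        "ReportVersioning": "OVERWRITE_REPORT",      # assumed required with ATHENA
    }


definition = hourly_cur_definition("example-billing-bucket", "cur", "us-east-1")
print(definition)
```

With credentials, the call would look like `boto3.client("cur", region_name="us-east-1").put_report_definition(ReportDefinition=definition)`; the bucket also needs a policy that lets AWS billing write to it.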

While valuable for interactive cost analysis and providing excellent visualizations, AWS Cost Explorer doesn't offer the automatic report generation and S3 delivery functionality needed in this scenario. It's better suited for hands-on exploration of cost trends and patterns.

The AWS Pricing Calculator service serves a different purpose entirely - it's a planning tool used before deployment to estimate future AWS costs. It helps model different scenarios but doesn't deal with actual usage data or report generation.

AWS Budgets focuses on proactive cost control through threshold setting and alerts. While this is crucial for financial management, it doesn't provide the detailed historical usage reporting capability the company requires.

When you encounter questions about detailed cost reporting in AWS, remember that AWS CUR stands out when detailed historical analysis and S3 delivery are mentioned in the requirements. The other cost management tools serve important but distinct purposes in the AWS ecosystem.


When you need to configure Amazon Route 53 to automatically redirect traffic from a primary site to a backup site in case of failure, which routing policy should you choose?

Latency-based routing

Simple routing

Weighted routing

Failover routing

The Failover routing policy is specifically designed for active-passive configurations, where you want your primary site to handle all traffic under normal conditions but need automatic redirection to a backup site if the primary site fails. Route 53 accomplishes this by monitoring the health of your primary site and quickly redirecting traffic when issues are detected.

Here's how it works:

- Route 53 performs health checks on your primary endpoint.

- Route 53 automatically routes traffic to the secondary (passive) endpoint if the health check fails.

- Once the primary endpoint returns to a healthy state, traffic is routed back to it.
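The three steps above correspond to a pair of failover records in Route 53. The sketch below builds the ChangeBatch that boto3's `change_resource_record_sets` accepts; the record name, IP addresses (from documentation ranges), and health check ID are hypothetical.

```python
def failover_change_batch(name, primary_ip, secondary_ip,
                          primary_health_check_id, ttl=60):
    """Build a Route 53 ChangeBatch with a PRIMARY/SECONDARY failover pair.
    All arguments in the example call below are hypothetical."""

    def record(set_id, role, ip, health_check_id=None):
        rrset = {
            "Name": name,
            "Type": "A",
            "SetIdentifier": set_id,
            "Failover": role,                  # "PRIMARY" or "SECONDARY"
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip}],
        }
        if health_check_id:
            # Route 53 answers with the secondary when this check is unhealthy.
            rrset["HealthCheckId"] = health_check_id
        return {"Action": "UPSERT", "ResourceRecordSet": rrset}

    return {
        "Comment": "Active-passive failover",
        "Changes": [
            record("primary", "PRIMARY", primary_ip, primary_health_check_id),
            record("secondary", "SECONDARY", secondary_ip),
        ],
    }


batch = failover_change_batch(
    "www.example.com.", "192.0.2.10", "198.51.100.10",
    "11111111-2222-3333-4444-555555555555",
)
print(batch)
```

With credentials, you would apply it as `route53.change_resource_record_sets(HostedZoneId=<your hosted zone ID>, ChangeBatch=batch)`. Only the primary carries a health check here; attaching one to the secondary is optional.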

Let's examine why the other options aren't suitable for active-passive configurations:

Latency-based routing directs users to the region that provides the lowest latency. While useful for optimizing user experience, it doesn't provide the failover functionality needed for active-passive setups.

Simple routing sends traffic to a single resource or distributes it randomly among multiple resources. It doesn't support health checks or failover capabilities.

Weighted routing lets you distribute traffic across multiple resources based on assigned weights. Weighted records can be associated with health checks, but the policy is designed to split traffic proportionally (for example, 90/10), not to keep a standby idle until the primary fails. Failover routing is the purpose-built option for true active-passive configurations.

When you see "active-passive" in a Route 53 question, think of failover routing. The key concept is having a primary resource handling traffic with a backup ready to take over if needed.


Which of the following elements is considered part of the AWS Global Infrastructure?

Virtual Private Network (VPN)

AWS Region

Virtual Private Cloud (VPC)

Subnet

AWS Region is a fundamental component of AWS Global Infrastructure, which consists of Regions, Availability Zones, and Edge Locations distributed worldwide to provide reliable and low-latency cloud services.

Here's why AWS Region is the correct answer:

- A Region is a physical location where AWS clusters data centers into multiple, isolated Availability Zones

- Each Region is completely independent and isolated from other Regions

- Regions allow customers to deploy applications closer to their end users for better performance

- They enable data sovereignty compliance by keeping data within specific geographic boundaries

- As of 2024, AWS operates dozens of Regions globally, with more being added regularly

The other options are logical networking constructs, not physical infrastructure:

Virtual Private Network (VPN) is a networking service that creates a secure connection between your network and AWS. While it's important for connectivity, it's a service running on top of the global infrastructure, not a part of it.

Virtual Private Cloud (VPC) is a logically isolated section of the AWS cloud where you launch your resources. It's a networking service that operates within a Region, making it a service that runs on the global infrastructure rather than being part of it.

Subnet is a segment of a VPC's IP address range where you place AWS resources. Like VPC, it's a networking construct that helps organize resources within a Region, not a physical infrastructure component.

When tackling questions about AWS Global Infrastructure, focus on the physical components (Regions, Availability Zones, Edge Locations) rather than the logical or virtual services that run on top of this infrastructure.


How would you best describe an AWS Availability Zone in terms of its physical infrastructure?

One or more server racks in the same location

One or more data centers in multiple locations

One or more server racks in multiple locations

One or more data centers in the same location

An Availability Zone consists of one or more data centers located within close geographical proximity to each other. Think of it like a neighborhood where several data centers are clustered together, all connected by high-speed, low-latency networks. While physically separate, these data centers work together as a single unit to provide redundant power, networking, and connectivity.

This design supports AWS's high-availability architecture. The data centers in an AZ share redundant power, networking, and connectivity, which reduces the impact of a problem in a single facility. However, an AZ can still fail as a whole, so AWS recommends deploying critical workloads across multiple AZs for high availability.

Let's understand why the other descriptions aren't accurate:

Having just server racks in the same location would be too small in scale to provide the robust infrastructure needed for an AZ. Server racks alone couldn't deliver the level of redundancy and fault tolerance that AWS customers require.

Having data centers or server racks spread across multiple locations would describe a Region rather than an AZ. The AZs within a Region are, in turn, located close enough to each other for low-latency connections but far enough apart to avoid shared points of failure.

When thinking about AWS infrastructure, remember the hierarchy: Regions contain multiple AZs, and AZs contain multiple data centers. This helps you understand how AWS achieves its remarkable reliability and availability.

To visualize this, imagine a city (Region) with several neighborhoods (AZs), each containing multiple buildings (data centers). While the neighborhoods are close enough for quick communication, they're far enough apart to be protected from localized issues like power outages or natural disasters.
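The Region/AZ hierarchy also shows up in naming: a standard AZ name is its Region code plus a letter suffix (for example, us-east-1a sits in us-east-1). The helper below is a hypothetical sketch that recovers the Region from such a name. Note that the letter-to-facility mapping can differ between AWS accounts, which is why AWS also exposes consistent AZ IDs such as use1-az1.

```python
import re

# Standard AZ names: Region code followed by a single letter, e.g. "us-east-1a".
# Local Zones and Wavelength Zones use longer names and are rejected on purpose.
_AZ_NAME = re.compile(r"^([a-z]{2}(?:-gov)?-[a-z]+-\d+)([a-z])$")


def region_of_az(az_name):
    """Return the Region code containing a standard Availability Zone name
    (hypothetical helper, not an AWS API)."""
    match = _AZ_NAME.match(az_name)
    if match is None:
        raise ValueError(f"not a standard AZ name: {az_name!r}")
    return match.group(1)


print(region_of_az("us-east-1a"))     # us-east-1
print(region_of_az("eu-central-1b"))  # eu-central-1
```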


When designing a Virtual Private Cloud (VPC) in AWS, which two core networking components are essential parts of its architecture?

AWS Storage Gateway

API Gateway

Subnet

Object

Internet Gateway

Subnets and Internet Gateways are fundamental building blocks of an Amazon VPC, working together to create a secure and functional network architecture in the cloud. Let me explain how these components work together to form your virtual network.

A subnet is like a smaller network segment within your VPC. Just as you might divide a physical office building into different departments with their own security requirements, subnets allow you to segment your VPC into smaller networks. For example, you might create public subnets for web servers needing internet access and private subnets for databases that should remain isolated. Each subnet exists within a single Availability Zone, helping you build highly available applications.

An Internet Gateway serves as your VPC's connection to the Internet; think of it as the front door of your cloud network. Without one, resources in your VPC can't communicate with the Internet, much like a building without an entrance. When you attach an Internet Gateway to your VPC, add a route for 0.0.0.0/0 that points to it in a subnet's route table, and give instances public IP addresses, resources in that public subnet can reach the Internet, and Internet-based clients can reach your public resources.
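To make the subnet and routing ideas concrete, the sketch below uses Python's standard ipaddress module to carve a hypothetical 10.0.0.0/16 VPC range into /24 subnets and to describe the routes that make a subnet public. The CIDR block, the Internet Gateway ID, and the simplified route dictionaries are illustrative assumptions, not AWS API shapes. The five-address deduction reflects AWS reserving the first four and the last IP address in every subnet.

```python
import ipaddress
from itertools import islice

VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")  # hypothetical VPC range
AWS_RESERVED_PER_SUBNET = 5  # first four and last address of each subnet


def carve_subnets(vpc, new_prefix, count):
    """Return the first `count` non-overlapping /new_prefix subnets of the VPC."""
    subnets = list(islice(vpc.subnets(new_prefix=new_prefix), count))
    if len(subnets) < count:
        raise ValueError("VPC range too small for the requested subnets")
    return subnets


def public_route_table(vpc, igw_id):
    """Simplified routes for a public subnet: local VPC traffic plus a
    default route to the Internet Gateway (a private subnet omits the latter)."""
    return [
        {"Destination": str(vpc), "Target": "local"},
        {"Destination": "0.0.0.0/0", "Target": igw_id},
    ]


public_subnet, private_subnet = carve_subnets(VPC_CIDR, 24, 2)
print(public_subnet, private_subnet)  # 10.0.0.0/24 10.0.1.0/24
print(public_subnet.num_addresses - AWS_RESERVED_PER_SUBNET)  # 251
print(public_route_table(VPC_CIDR, "igw-0123456789abcdef0"))  # hypothetical IGW ID
```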

Now, let's understand why the other components aren't part of a VPC's core architecture:

AWS Storage Gateway is a hybrid storage service that connects on-premises environments with cloud storage. While it can work with resources in a VPC, it's not a VPC component itself.

API Gateway is a service for creating and managing APIs. Though it can integrate with VPC resources, it exists as a separate service outside your VPC.

Objects typically refer to items stored in Amazon S3 and have no direct relationship with VPC architecture.

When studying VPC concepts, think about networking in layers. Start with the VPC itself, then understand how subnets segment the network, and finally how components like Internet Gateways connect your VPC to external networks. This layered approach will help you understand how all the pieces fit together.


A business needs help identifying suitable AWS services as they plan their migration from on-premises to AWS Cloud. As a Cloud Practitioner, which two options would you recommend?

AWS CloudTrail

AWS Organizations

Amazon CloudWatch

AWS Partner Network (APN)

AWS Service Catalog

AWS Partner Network (APN) provides access to thousands of professional AWS partners who can offer expert guidance during cloud migration. These partners have deep AWS expertise and can help organizations evaluate their current infrastructure, recommend suitable AWS services, and plan migration strategies. APN partners often have experience with similar migrations and can share best practices and proven architectures.

AWS Service Catalog allows organizations to create and manage catalogs of approved AWS services for use within their company. IT administrators can create pre-configured service offerings that comply with organizational standards, making it easier for teams to identify and deploy appropriate AWS services. This helps maintain consistency and compliance while accelerating the adoption of AWS services across the organization.

AWS CloudTrail is a service for governance, compliance, and operational auditing of your AWS account. While valuable for security and compliance, it doesn't help identify which AWS services to use. CloudTrail records API activity after you're already using AWS services, but it doesn't provide guidance on service selection.

AWS Organizations helps you centrally manage and govern multiple AWS accounts. While it's useful for managing accounts at scale, it doesn't provide guidance on which AWS services to use. Its primary purpose is to consolidate billing and apply policies across accounts.

Amazon CloudWatch is a monitoring and observability service for AWS resources and applications. It collects operational data and metrics from resources you're already using but doesn't help identify which services to use during migration planning.

When considering migration to AWS, remember that AWS provides human expertise (through APN) and technical tools (like Service Catalog) to help organizations make informed decisions. Questions about migration planning often focus on these strategic resources rather than operational tools.


When managing IAM, which practices are considered AWS best practices for security? (Select two.)

Grant maximum privileges to avoid assigning privileges again

Create a minimum number of accounts and share these account credentials among employees

Enable multi-factor authentication (MFA) for all users

Rotate credentials regularly

Share AWS account root user access keys with other administrators

The correct answers are:

- Enable multi-factor authentication (MFA) for all users

- Rotate credentials regularly

Let's first understand why these two practices are crucial for AWS security:

Enabling MFA for all users is a fundamental security practice that AWS strongly recommends. MFA adds an essential second layer of protection beyond just passwords. Users need their password and a temporary code from their MFA device when they log in. This significantly reduces the risk of account compromise even if passwords are exposed. For example, even if a malicious actor obtains an employee's password through phishing, they still can't access the account without the MFA device.

Regular credential rotation is another critical security practice. By changing access keys and passwords periodically, you limit the damage that could occur if credentials are compromised. A common benchmark, and the default used by AWS automated checks such as the AWS Config access-keys-rotated managed rule, is to rotate access keys at least every 90 days. This includes IAM user access keys, console passwords, and any other long-term authentication credentials.
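A rotation policy is easy to check automatically. The sketch below flags active access keys older than an assumed 90-day threshold; each entry is assumed to have the shape of an item from boto3's `iam.list_access_keys()["AccessKeyMetadata"]`, and the sample key IDs are AWS's documentation examples rather than real keys.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)  # assumed rotation threshold


def keys_due_for_rotation(access_keys, now=None, max_age=MAX_KEY_AGE):
    """Return IDs of active access keys older than max_age.

    Entries are assumed to carry AccessKeyId, Status, and a timezone-aware
    CreateDate, as in boto3's list_access_keys() metadata.
    """
    now = now or datetime.now(timezone.utc)
    return [
        key["AccessKeyId"]
        for key in access_keys
        if key["Status"] == "Active" and now - key["CreateDate"] > max_age
    ]


sample = [
    {"AccessKeyId": "AKIAIOSFODNN7EXAMPLE", "Status": "Active",
     "CreateDate": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAI44QH8DHBEXAMPLE", "Status": "Active",
     "CreateDate": datetime(2024, 5, 1, tzinfo=timezone.utc)},
]
print(keys_due_for_rotation(sample, now=datetime(2024, 6, 1, tzinfo=timezone.utc)))
# ['AKIAIOSFODNN7EXAMPLE']
```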

Now, let's examine why the other options are incorrect and potentially dangerous:

Granting maximum privileges violates the least privilege principle, a cornerstone of AWS security. Users should only have the minimum permissions needed to perform their tasks. For instance, if a developer only needs to work with EC2 instances, they shouldn't have access to database or billing services.
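For the EC2 example above, a least-privilege identity policy might look like the following sketch, built as a Python dictionary and serialized to the JSON IAM expects. The Region and account ID in the ARN are hypothetical (111122223333 is AWS's documentation example), and the exact actions a real developer needs will vary.

```python
import json

# Hypothetical Region and account ID; 111122223333 is AWS's documentation example.
INSTANCE_ARN = "arn:aws:ec2:us-east-1:111122223333:instance/*"

ec2_operator_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # DescribeInstances does not support resource-level permissions,
            # so it must use "*" as the resource.
            "Sid": "ViewInstances",
            "Effect": "Allow",
            "Action": "ec2:DescribeInstances",
            "Resource": "*",
        },
        {
            # Start/stop is scoped to instances in one account and Region;
            # no database, billing, or wildcard actions are granted.
            "Sid": "StartStopInstances",
            "Effect": "Allow",
            "Action": ["ec2:StartInstances", "ec2:StopInstances"],
            "Resource": INSTANCE_ARN,
        },
    ],
}

print(json.dumps(ec2_operator_policy, indent=2))
```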

Creating a minimal number of accounts and sharing credentials among employees is a serious security risk. Each person should have their own IAM identity to maintain accountability and auditability. Shared credentials make it impossible to track who performed which actions and complicate access revocation when employees leave.

Sharing root user access keys with other administrators is extremely dangerous. The root user has unrestricted access to all AWS services and resources in the account. AWS recommends not creating access keys for the root user at all, never sharing root credentials, and instead giving each administrator an individual identity with appropriate permissions.

Questions about IAM best practices often focus on security principles like least privilege, individual accountability, and multiple layers of protection. When you see options about sharing credentials or granting excessive permissions, these are typically incorrect.


Frequently Asked Questions

Yes, absolutely. The CLF-C02 exam is deceptively broad, requiring you to quickly differentiate between dozens of AWS services, cloud concepts, and pricing models across a wide range of real-world scenarios—a common stumbling block for first-time candidates. From our experience, CertVista's practice exams are the most effective tool to master this skill. Our realistic test engine simulates the actual exam environment, moving you beyond simple memorization to train the critical ability of applying cloud knowledge correctly under pressure, which is the key to passing the AWS Certified Cloud Practitioner exam.

Based on performance data from thousands of successful users, the clear benchmark for success is consistently scoring 85% or higher across multiple CertVista practice exams. Achieving this score indicates you have a firm grasp of cloud computing fundamentals, core AWS services, security principles, and pricing models, and can apply them effectively under timed conditions. It proves you are truly ready and strongly correlates with a first-time pass on the official CLF-C02 exam.

CertVista provides a professional preparation tool where free quizzes fall short. The key differences are our realism, with questions that meticulously mirror the CLF-C02's scenario-based format, and our in-depth explanations for every answer choice—correct and incorrect—which transforms practice into a powerful learning tool. We also guarantee 100% coverage of all official CLF-C02 exam domains, including cloud concepts, security and compliance, billing and pricing, and core AWS technology, and provide performance analytics to pinpoint your weak areas, ensuring your study time is as efficient as possible.

The single biggest mistake we see is candidates confusing the specific use cases for similar AWS services (e.g., AWS Shield vs. AWS WAF, or Amazon CloudWatch vs. AWS CloudTrail). CertVista's practice exams are specifically engineered to solve this problem. We immerse you in realistic, scenario-based questions that force you to make these critical distinctions repeatedly until the unique purpose of each service becomes second nature, eliminating the confusion that causes many CLF-C02 candidates to fail.

The CLF-C02 exam primarily uses multiple-choice and the more challenging multiple-response formats, both of which are extensively covered in our CertVista test engine. While other formats may appear, our preparation methodology inherently prepares you for them. By focusing on the relationships between AWS services, cloud architecture concepts, and security and billing best practices, our scenario-based questions and detailed explanations build a core competency that allows you to analyze and correctly answer any question format AWS might present on the Cloud Practitioner exam.