AWS Certified AI Practitioner (AIF-C01)

- 470 exam-style questions

- Detailed explanations and references

- Simulation and custom modes

- Custom exam settings to drill down into specific topics

- 180-day access period

- Pass or money back guarantee

What is in the package

CertVista AIF-C01 content, tone, and depth precisely mirror the questions in the AWS Certified AI Practitioner (AIF-C01) exam. Our comprehensive materials include detailed explanations and practical exam-taker tips, thoroughly referencing AWS documentation to prepare you for all domain areas of the AI Practitioner certification.

Please consider this course the final pit-stop so you can cross the winning line with absolute confidence and get AWS AI Certified! Trust our process; you are in good hands.

Complete AIF-C01 domains coverage

Our practice exams fully align with the official AWS courseware and the Certified AI Practitioner exam objectives.

1. Fundamentals of AI and ML

Covers core AI/ML concepts, terminology, and practical applications. This domain focuses on understanding basic AI concepts, identifying suitable use cases, and comprehending the ML development lifecycle. It includes knowledge of different learning types, data types, and AWS's managed AI services. The domain also emphasizes understanding MLOps concepts and model performance evaluation.

2. Fundamentals of Generative AI

Focuses on generative AI essentials, including foundational concepts like tokens, embeddings, and prompt engineering. This domain explores use cases for generative AI, its lifecycle, capabilities, and limitations. It also covers AWS's infrastructure and technologies for building generative AI applications, including cost considerations and service selection.

3. Applications of Foundation Models

Explores the practical aspects of working with foundation models, including design considerations, prompt engineering techniques, and model customization. This domain covers model selection criteria, Retrieval Augmented Generation (RAG), training processes, and performance evaluation methods. It emphasizes understanding both technical implementation and business value assessment.

4: Guidelines for Responsible AI

Addresses the ethical and responsible development of AI systems. This domain covers important aspects like bias, fairness, inclusivity, and transparency in AI systems. It includes understanding tools for responsible AI development, recognizing legal risks, and implementing practices for transparent and explainable AI models.

5. Security, Compliance, and Governance for AI Solutions

Focuses on securing AI systems and ensuring regulatory compliance. This domain covers AWS security services, data governance strategies, and compliance standards specific to AI systems. It includes understanding security best practices, privacy considerations, and governance protocols for AI implementations.

CertVista's AI Practitioner question bank contains hundreds of exam-style questions that accurately replicate the certification exam environment. Practice with diverse question types, including multiple-choice, multiple-response, and scenario-based questions focused on real-world AI implementation challenges. CertVista exam engine will familiarize you with the real exam environment so you can confidently approach your certification.

Each CertVista question comes with a detailed explanation and references. The explanation outlines the underlying AI principles, references official AWS documentation, and clarifies common misconceptions. You'll learn why the correct answer satisfies the scenario presented in the question and why the other options do not.

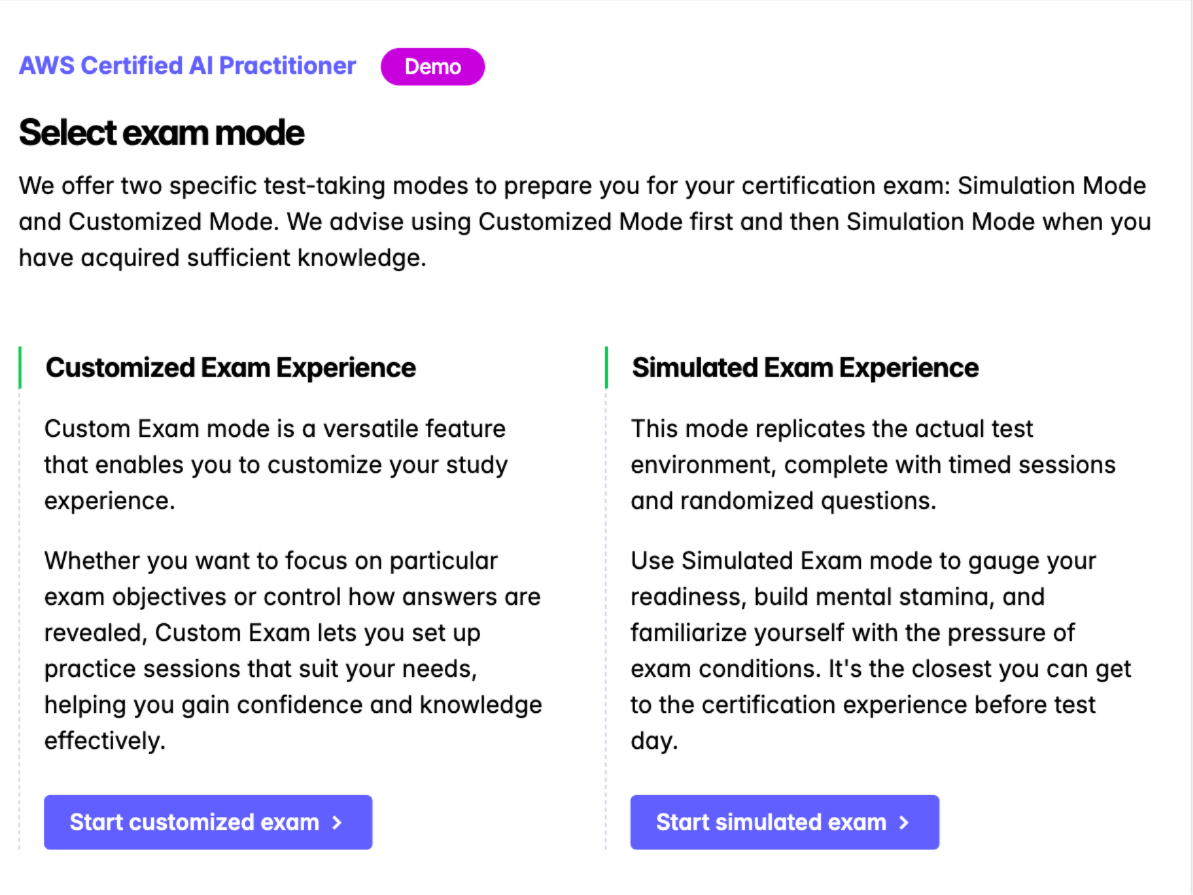

CertVista offers two effective study modes: Custom Mode is for focused practice on specific AWS domains and is perfect for strengthening knowledge in targeted areas. Simulation Mode replicates the 90-minute exam environment with authentic time pressure and question distribution, building confidence and stamina.

The CertVista analytics dashboard helps you gain clear insights into your AWS exam preparation. You can monitor your performance across all exam domains and identify knowledge gaps. This will help you create an efficient study strategy and know when you're ready for certification.

What's in the AIF-C01 exam

Navigating the landscape of artificial intelligence and machine learning certifications can be daunting. As a leading provider of certification preparation materials, we've seen many professionals struggle to identify the right starting point. The AWS Certified AI Practitioner (AIF-C01) exam is designed to be that entry point, validating a candidate's foundational knowledge of AI and ML concepts and their application within the AWS ecosystem.

This comprehensive guide breaks down everything you need to know about the AIF-C01 exam, drawing from our extensive analysis of the exam structure, content, and feedback from successful candidates.

What is the AWS AIF-C01 exam?

The AWS Certified AI Practitioner (AIF-C01) exam is a foundational-level certification test that validates an individual's understanding of artificial intelligence (AI), machine learning (ML), and generative AI concepts and their use cases on the Amazon Web Services (AWS) platform. It is designed for individuals in various roles, including IT support, sales, business analysis, and project management, who must demonstrate a broad understanding of AI/ML technologies without necessarily having deep technical or coding expertise.

Who should take the AIF-C01 exam?

This exam is ideal for individuals beginning their journey into AI and machine learning or those in non-technical roles who want to understand the business applications of AI on AWS. While there are no strict prerequisites, AWS recommends that candidates have up to six months of exposure to AI/ML technologies on AWS. A basic understanding of core AWS services is also beneficial. From our experience, professionals already holding the AWS Certified Cloud Practitioner certification will find it a logical next step.

What are the key details of the AIF-C01 exam?

Here is a summary of the essential information for the AIF-C01 exam as of early 2025.

| Exam Detail | Description |

|---|---|

| Exam Name | AWS Certified AI Practitioner |

| Exam Code | AIF-C01 |

| Number of Questions | 65 questions (50 scored, 15 unscored) |

| Time Allotted | 90 minutes |

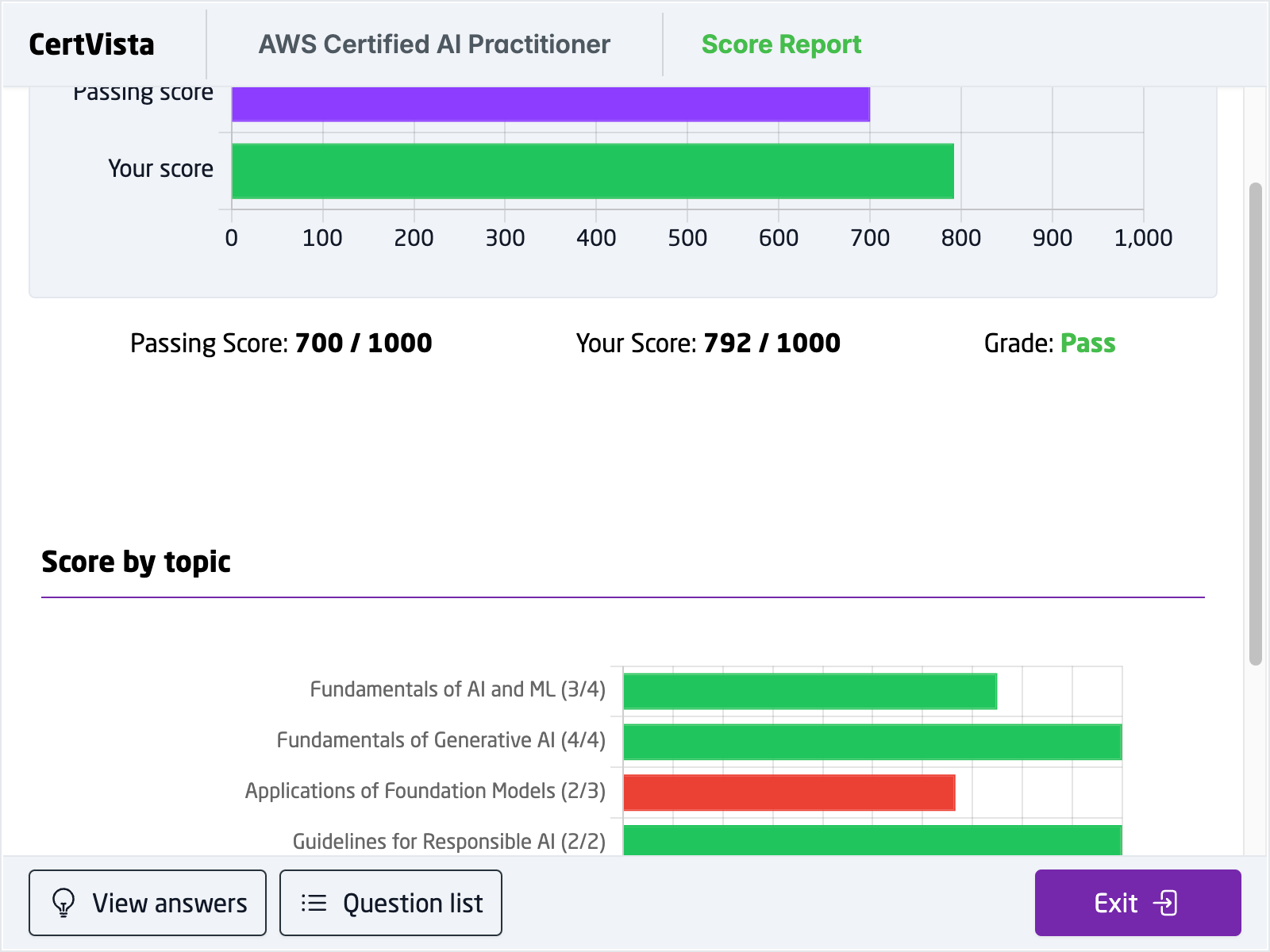

| Passing Score | 700 out of a possible 1,000 |

| Exam Cost | $100 USD (plus applicable taxes) |

| Question Formats | Multiple choice, multiple response, ordering, and matching |

| Delivery Method | Pearson VUE testing center or online proctored exam |

What are the domains covered in the AIF-C01 exam?

The AIF-C01 exam is structured around five key domains, each with a specific weighting that indicates its importance on the test. A common mistake we see is candidates focusing too heavily on one domain while neglecting others. A balanced study approach is crucial for success.

- Domain 1: Fundamentals of AI and ML (20%): This domain covers the basic concepts and terminology of AI, ML, deep learning, and neural networks.

- Domain 2: Fundamentals of Generative AI (24%): This section focuses on the basics of generative AI, including techniques for creating new content.

- Domain 3: Applications of Foundation Models (28%): This is the largest domain and examines the practical applications of large language models and other foundation models.

- Domain 4: Guidelines for Responsible AI (14%): This domain highlights the ethical considerations and best practices for deploying AI solutions responsibly, including fairness and transparency.

- Domain 5: Security, Compliance, and Governance for AI Solutions (14%): This section covers the security measures, compliance requirements, and governance practices for managing AI solutions.

What types of questions are on the CLF-C01 exam?

The CLF-C01 exam primarily uses two question formats, but it's important to be prepared for any style that tests your knowledge of relationships between concepts.

- Multiple-choice: This is the most common format. You are presented with a question and four possible answers and must select the best option. These questions test your knowledge of a service, concept, or best practice.

- Multiple Response: This format is more challenging. You'll see a question with five or more possible answers and be asked to choose two or more correct options. A common mistake here is not realizing there is no partial credit; you must select all the correct answers to get the point. These questions test a deeper understanding and your ability to identify multiple valid solution components.

What about other question types like Matching or Ordering?

While less common on the current CLF-C01 exam, AWS can incorporate different question styles to test your understanding.

Matching Questions

These would require matching items from one list to another (e.g., matching an AWS service to its correct use case).

To answer a matching question, you will match list responses to one or more prompts provided in the question. Each question will include a list of 3-7 responses. The directions in the question will state the number of responses to match and if each response should be selected from each dropdown list once or more than once. You must correctly match all prompts with a response to earn credit for the question.

Ordering Questions

These would ask you to arrange items in the correct sequence (e.g., the steps to create a specific resource).

To answer an ordering question, you will place a list of responses in the order specified in the question. Each question will include a list of 3–5 responses and state how to order the responses. The directions will state if each response should be selected from the dropdown lists once or if some responses might not be selected. If some responses might not be selected, there are distractors in the list of responses. The directions will also state the total number of responses to order. You must correctly order all responses to earn credit for the question.

The following video presents an example of an AIF-C01 ordering question:

Even if you don't encounter these specific formats, the underlying skills they test are crucial. They measure your ability to understand relationships, sequences, and categories. This is where CertVista's study methodology excels. Our scenario-based questions and in-depth explanations don't just teach you isolated facts. They train you to understand the relationships between problems and solutions, between services and their features, and between different pricing models. This core competency, built through our practice exams, ensures you are prepared for the logic behind any question format AWS might use.

How should I prepare for the AIF-C01 exam?

A structured study plan is essential for passing the AIF-C01 exam. From our experience helping thousands of candidates, we recommend the following approach:

- Understand the Exam Blueprint: Thoroughly review the official AWS AIF-C01 exam guide to understand the domains and their weightings.

- Gain Foundational Knowledge: If you are new to AWS, start with foundational courses like the AWS Cloud Practitioner Essentials.

- Get Hands-On Experience: While not a developer-focused exam, getting hands-on experience with AWS AI/ML services through the AWS Free Tier can be highly beneficial. Experimenting with services like Amazon SageMaker, Amazon Bedrock, and Amazon Q will solidify your understanding.

- Practice with Realistic Questions: This is a critical step. At CertVista, we provide hundreds of exam-style questions on a highly realistic test engine to simulate the actual exam environment. Aim for consistent scores of 85% or higher on practice tests.

What key AWS services should I focus on?

While the AIF-C01 exam is conceptual, familiarity with key AWS AI/ML services is necessary. Based on our analysis of the exam content, you should have a solid understanding of the following:

- Core AI/ML Services: Amazon SageMaker, Amazon Bedrock, and Amazon Q are central to the exam.

- Applied AI Services: Be familiar with services like Amazon Rekognition for image and video analysis, Amazon Polly for text-to-speech, Amazon Lex for building chatbots, and Amazon Comprehend for natural language processing.

- Generative AI Tools: Understand Amazon Bedrock's capabilities for working with foundation models and the importance of prompt engineering.

How does the AIF-C01 compare to other AI certifications?

When considering an AI certification, it's helpful to understand how the AWS AIF-C01 compares to similar offerings from other major cloud providers and organizations.

| Certification | Provider | Focus Area | Target Audience | Our Take |

|---|---|---|---|---|

| AWS Certified AI Practitioner (AIF-C01) | AWS | Foundational concepts of AI, ML, and Generative AI on the AWS platform. | Beginners and non-technical professionals. | An excellent entry point for understanding AI within the market-leading cloud ecosystem. |

| Microsoft Certified: Azure AI Fundamentals (AI-900) | Microsoft | Foundational knowledge of ML and AI concepts and their implementation on Microsoft Azure. | Beginners and those new to AI on Azure. | A strong competitor, ideal for organizations heavily invested in the Azure cloud. |

| Google Cloud Certified - Generative AI | Fundamentals of generative AI and Google Cloud's generative AI offerings like Vertex AI. | Individuals looking to understand and apply generative AI on Google Cloud. | More focused on generative AI compared to the broader scope of the AIF-C01 and AI-900. | |

| CompTIA AI Essentials | CompTIA | Vendor-neutral foundational AI principles and terminology. | Business professionals, project managers, and marketers. | A good option for a general, non-platform-specific understanding of AI concepts. |

In our tests of the curriculum, the AIF-C01 provides a robust and practical introduction to AI that is immediately applicable in the context of AWS, the most widely used cloud platform.

What are some key AI and ML concepts I need to know?

To succeed in the AIF-C01 exam, you must fully grasp fundamental AI and ML terminology. Here are a few key concepts:

- Artificial Intelligence (AI): The theory and development of computer systems able to perform tasks that normally require human intelligence.

- Machine Learning (ML): A subset of AI that enables a system to learn and improve from experience without being explicitly programmed.

- Deep Learning: A subfield of machine learning based on artificial neural networks with multiple layers.

- Generative AI: A class of AI models that can create new content, such as text, images, or code.

- Foundation Models: Large, pre-trained models that can be adapted for various tasks.

- Prompt Engineering: designing and refining inputs (prompts) to guide a generative AI model to produce the desired output.

- Responsible AI: The practice of developing and deploying AI systems in a fair, unbiased, transparent, and accountable way.

A common mistake is candidates memorizing definitions without understanding the practical implications. Be sure to connect these concepts to the AWS services that implement them.

By Beana Ammanath, a Certified AI Strategist with over a decade of experience implementing cloud-based machine learning solutions.

Sample AIF-C01 questions

Get a taste of the AWS Certified AI Practitioner exam with our carefully curated sample questions below. These questions mirror the actual AIF-C01 exam's style, complexity, and subject matter, giving you a realistic preview of what to expect. Each question comes with comprehensive explanations, relevant documentation references, and valuable test-taking strategies from our expert instructors.

While these sample questions provide excellent study material, we encourage you to try our free demo for the complete AIF-C01 exam preparation experience. The demo features our state-of-the-art test engine that simulates the real exam environment, helping you build confidence and familiarity with the exam format. You'll experience timed testing, question marking, and review capabilities – just like the actual certification exam.

A company needs to implement an AI solution that can convert natural language input into SQL queries for their large-scale database analysis. The solution should be user-friendly for employees with limited technical expertise.

Which AI model would be most appropriate for this use case?

Generative pre-trained transformers (GPT)

Residual neural network

Support vector machine

WaveNet

Generative pre-trained transformers (GPT) is the most suitable solution for this scenario. GPT models excel at understanding and processing natural language input, making them ideal for converting plain English requests into structured SQL queries. They can understand context and intent behind user queries, which is crucial for employees with minimal technical experience.

The practical effectiveness of GPT for this use case has been demonstrated by major companies like Uber, which have successfully implemented GPT-based solutions for SQL query generation in enterprise environments. GPT models have consistently shown superior performance in text-to-SQL tasks compared to other AI models. Furthermore, GPT can handle complex database schemas and generate accurate SQL queries for large-scale data analysis. The model can be fine-tuned to understand specific business contexts and database structures, making it highly adaptable to different enterprise needs.

The other options are not suitable for this specific use case. Residual Neural Network, while powerful for image processing and deep learning tasks, is not specifically designed for natural language understanding and SQL generation. Support Vector Machine is a traditional machine learning algorithm better suited for classification and regression tasks, not complex language processing and query generation. WaveNet is a deep neural network primarily designed for audio generation and speech synthesis, making it inappropriate for text-to-SQL conversion.

When evaluating AI solutions for natural language processing tasks, particularly those involving text transformation or generation, GPT models are often the strongest candidates due to their advanced language understanding capabilities.

When building a prediction model, what's the relationship between underfitting/overfitting and bias/variance?

Underfit models experience high bias. Overfit models experience high variance.

Underfit models experience high bias. Overfit models experience low variance.

Underfit models experience low bias. Overfit models experience low variance.

Underfit models experience low bias. Overfit models experience high variance.

Understanding the relationship between underfitting/overfitting and bias/variance is fundamental in machine learning model development.

Bias refers to the error introduced by approximating a real-world problem, which may be complex, by a too-simple model. High bias means the model makes strong assumptions about the data, leading it to miss relevant relations between features and target outputs. This results in underfitting, where the model performs poorly even on the training data because it cannot capture the underlying trend.

Variance refers to the amount by which the model's learned function would change if it were trained on a different training dataset. High variance means the model is highly sensitive to the specific training data, learning noise and random fluctuations. This leads to overfitting, where the model performs exceptionally well on the training data but fails to generalize to new, unseen data.

Therefore, an underfit model (too simple) suffers from high bias because its fundamental assumptions prevent it from capturing the data's complexity. An overfit model (too complex) suffers from high variance because it learns the training data too specifically, including noise, making it perform poorly on different datasets.

The goal in model building is often described as finding a balance in the bias-variance tradeoff – creating a model that is complex enough to capture the underlying patterns (low bias) but not so complex that it learns the noise (low variance).

Remember these core associations: Underfitting = High Bias (model too simple, makes wrong assumptions) and Overfitting = High Variance (model too complex, learns noise, doesn't generalize). Visualize a simple linear model trying to fit a complex curve (high bias/underfitting) versus a high-degree polynomial wiggling to hit every single training point (high variance/overfitting).

Which SageMaker service helps split data into training, testing, and validation sets?

Amazon SageMaker Feature Store

Amazon SageMaker Clarify

Amazon SageMaker Ground Truth

Amazon SageMaker Data Wrangler

Preparing data for machine learning often involves splitting the dataset into subsets for training, validation, and testing. This ensures that the model is trained on one portion of the data, tuned on another, and finally evaluated on unseen data.

Amazon SageMaker Data Wrangler is designed to simplify the process of data preparation for ML. It provides a visual interface and a comprehensive set of built-in data transformations to help data scientists and engineers aggregate, prepare, and transform data. Among its capabilities, Data Wrangler allows users to apply various transformations, including those needed to split a dataset into training, validation, and testing sets based on specified criteria or proportions.

Amazon SageMaker Feature Store is a repository for storing, retrieving, and managing ML features, but it doesn't perform the splitting operation itself.

Amazon SageMaker Clarify focuses on detecting potential bias in data and explaining model predictions, not on splitting datasets for training.

Amazon SageMaker Ground Truth is a data labeling service used to create labeled datasets, which are often the input for the preparation phase, but it doesn't perform the train/test/validation split.

Think of Data Wrangler as the primary SageMaker tool for preparing raw data before training. This includes cleaning, transforming, and structuring data, which often involves splitting it. Associate Feature Store with managing features, Clarify with bias and explainability, and Ground Truth with labeling.

A company wants to build an interactive application for children that generates new stories based on classic stories. The company wants to use Amazon Bedrock and needs to ensure that the results and topics are appropriate for children.

Which AWS service or feature will meet these requirements?

Amazon Rekognition

Amazon Bedrock playgrounds

Guardrails for Amazon Bedrock

Agents for Amazon Bedrock

Guardrails for Amazon Bedrock provide a mechanism to implement safeguards for generative AI applications. They allow users to define specific policies to control the interaction between users and foundation models (FMs). Key features relevant to this scenario include:

- Denied Topics: Administrators can define topics that the application should not engage with. For a children's application, this could include sensitive or adult themes.

- Content Filters: Guardrails offer configurable filters to detect and block harmful content across categories like hate speech, insults, sexual content, and violence, based on specified thresholds.

- Word Filters: Specific words can be blocked.

- PII Redaction: Personally Identifiable Information can be filtered out.

By configuring Guardrails, the company can enforce content policies consistently across the different FMs available through Bedrock, ensuring the generated stories align with the requirement of being appropriate for children.

Amazon Rekognition is an image and video analysis service and is not used for moderating text generated by Bedrock models.

Amazon Bedrock playgrounds are environments for experimenting with models, not for implementing runtime safety controls in a deployed application.

Agents for Amazon Bedrock enable the creation of applications that can perform tasks using APIs, but they do not inherently provide the content filtering capability required; Guardrails are used to provide safety for agents and direct model invocations.

Therefore, Guardrails for Amazon Bedrock is the appropriate feature to meet the requirement for content safety and appropriateness.

When questions involve ensuring safety, appropriateness, or filtering harmful content in generative AI applications built on AWS, immediately think of Guardrails for Amazon Bedrock. It's the purpose-built feature for implementing these types of policies.

A company needs an AI assistant that can help employees by answering questions, creating summaries, generating content, and securely working with internal company data.

Which Amazon Q solution is designed for this kind of general business use across an organization?

Amazon Q Business

Amazon Q in Connect

Amazon Q Developer

Amazon Q in QuickSight

Amazon Q Business is specifically designed as an AI assistant for work. It can connect to various enterprise data sources, understand company-specific information (like organizational structure, product names, and internal jargon), and help employees with tasks such as answering questions based on internal knowledge bases, summarizing documents, drafting emails, generating reports, and more, all while respecting existing security permissions.

Amazon Q in Connect is tailored for customer service agents using Amazon Connect, helping them with real-time responses and actions during customer interactions.

Amazon Q Developer is focused on assisting developers throughout the software development lifecycle, including code generation, debugging, and optimization, integrated into IDEs and AWS environments.

Amazon Q in QuickSight enhances the business intelligence experience within Amazon QuickSight, allowing users to build dashboards and gain insights using natural language queries.

Given the requirements for a general-purpose assistant for employees across the organization to work securely with internal data for various tasks like Q&A, summarization, and content generation, Amazon Q Business is the appropriate solution.

Amazon Q has several variations tailored to specific use cases. Pay attention to the context described in the question. If it's about general employee productivity and accessing internal business data, think Amazon Q Business. If it's about coding, think Amazon Q Developer. If it's about contact centers, think Amazon Q in Connect. If it's about BI dashboards, think Amazon Q in QuickSight.

A software development company is building generative AI solutions and needs to understand the distinctions between model inference and model evaluation.

Which option best summarizes these differences in the context of generative AI?

Model inference is the process of evaluating and comparing model outputs to determine the model that is best suited for a use case.

Model evaluation is the process of a model generating an output (response) from a given input (prompt).

Both model inference and model evaluation refer to the process of evaluating and comparing model outputs to determine the model that is best suited for a use case

Model evaluation is the process of evaluating and comparing model outputs to determine the model that is best suited for a use case.

Model inference is the process of a model generating an output (response) from a given input (prompt).

Both model inference and model evaluation refer to the process of a model generating an output from a given input.

Understanding the distinction between model inference and model evaluation is crucial when working with generative AI models.

Model Inference: This is the process where a trained foundation model (FM) takes an input, typically called a prompt, and generates an output or response. For example, providing a text prompt like "Write a short story about a friendly robot" to a large language model (LLM) and receiving the generated story is an inference operation. It's the act of using the model to produce results.

Model Evaluation: This involves assessing the performance and quality of a model's outputs to determine its suitability for a specific task or use case. In generative AI, evaluation can be complex and often involves both quantitative metrics (if available) and qualitative assessments (human judgment). This process might compare outputs from different models based on criteria like relevance, coherence, accuracy, safety, or adherence to specific instructions. The goal is to understand how well the model performs and select the best one for the intended application.

Therefore, model evaluation is about judging the model's output quality and suitability, while model inference is the process of the model generating that output.

Remember the flow: You first perform inference (generate output using the model), and then you evaluate that output (assess its quality/suitability). Think of inference as the action of the model and evaluation as the judgment of that action's result.

A company is using a pre-trained large language model (LLM) to build a chatbot for product recommendations. The company needs the LLM outputs to be short and written in a specific language.

Which solution will align the LLM response quality with the company's expectations?

Adjust the prompt.

Choose an LLM of a different size.

Increase the temperature.

Increase the Top K value.

The most direct and common method to guide the behavior of a pre-trained LLM without modifying the model itself is through prompt engineering.

Adjusting the prompt involves carefully crafting the input text given to the LLM. By including explicit instructions within the prompt, the company can guide the model's response. For example, the prompt could be structured like: "You are a helpful chatbot recommending products. Respond in Spanish and keep your answer to one or two sentences. Recommend a product similar to [product name]."

This prompt clearly states the desired constraints: the language (Spanish) and the length (one or two sentences). The LLM will use these instructions to generate an output that aligns with the company's expectations.

Choosing an LLM of a different size might impact overall capability or performance, but it doesn't offer fine-grained control over the output length or language for a specific request.

Increasing the temperature parameter makes the output more random and creative, often leading to longer and less predictable responses, which is contrary to the requirement for short, specific outputs.

Increasing the Top K sampling parameter allows the model to consider more words at each step, increasing diversity but not specifically controlling length or language. It's a method for controlling randomness, similar to temperature.

Remember that prompt engineering is a powerful technique for controlling the output of generative AI models. When requirements involve specific output formats, styles, languages, or lengths, explicitly stating these constraints in the prompt is often the first and most effective approach.

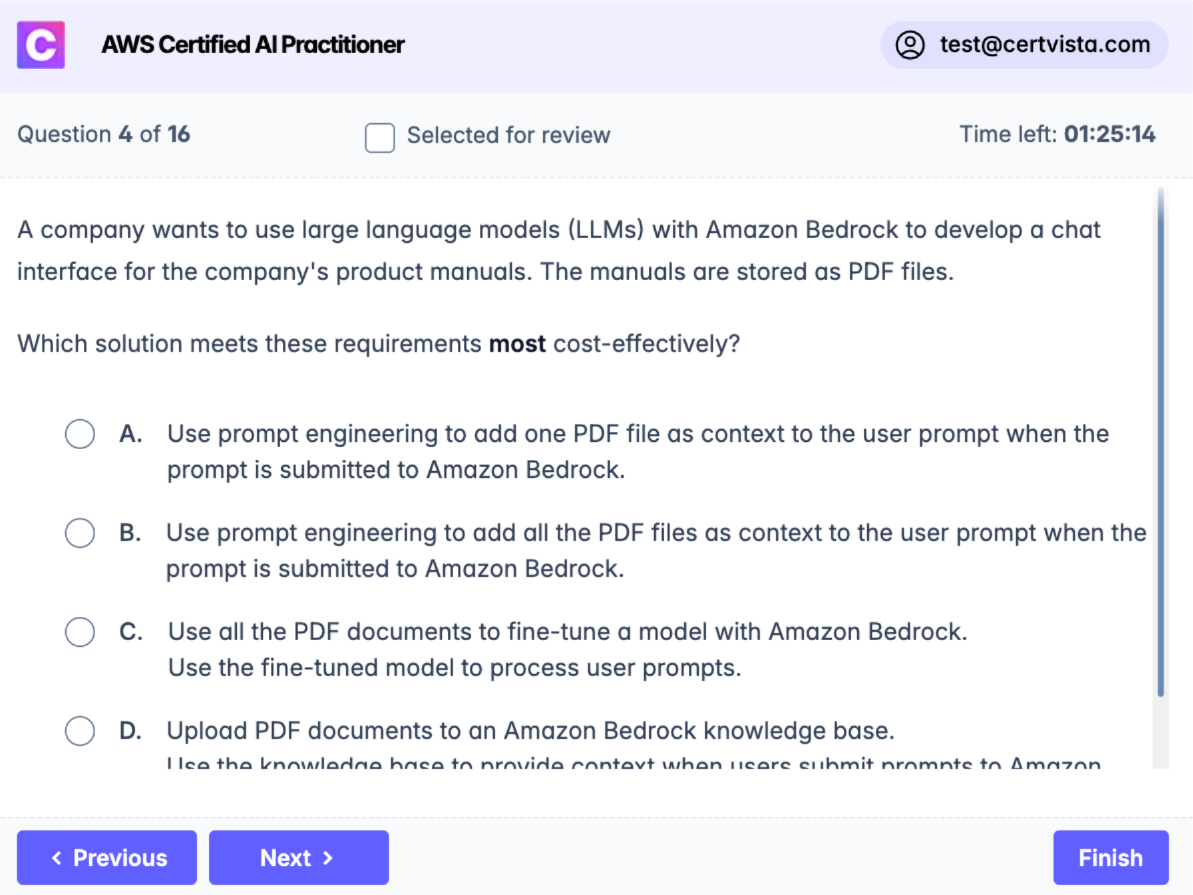

A company wants to use large language models (LLMs) with Amazon Bedrock to develop a chat interface for the company's product manuals. The manuals are stored as PDF files.

Which solution meets these requirements most cost-effectively?

Use prompt engineering to add one PDF file as context to the user prompt when the prompt is submitted to Amazon Bedrock.

Use prompt engineering to add all the PDF files as context to the user prompt when the prompt is submitted to Amazon Bedrock.

Use all the PDF documents to fine-tune a model with Amazon Bedrock. Use the fine-tuned model to process user prompts.

Upload PDF documents to an Amazon Bedrock knowledge base. Use the knowledge base to provide context when users submit prompts to Amazon Bedrock.

Using Amazon Bedrock knowledge base is the most cost-effective solution for this use case. Knowledge bases implement a managed Retrieval Augmented Generation (RAG) architecture that efficiently retrieves relevant information from the uploaded documents when needed. This approach optimizes both performance and cost by only retrieving and using relevant portions of the documents for each query.

The other approaches have significant drawbacks:

Adding a single PDF file as context through prompt engineering would limit the chatbot's ability to access information from other manuals, requiring multiple queries and increasing costs. This would also provide incomplete responses if the information spans multiple manuals.

Including all PDF files as context in every prompt would be highly inefficient and expensive. This approach would unnecessarily increase token usage and processing costs for each query, even when only a small portion of the documentation is relevant.

Fine-tuning the model with all PDF documents would be the most expensive option. It requires significant computational resources and typically takes longer compared to other approaches. Additionally, updating the model with new or modified documentation would require repeated fine-tuning, increasing costs further.

When evaluating solutions involving document processing with LLMs, consider both the immediate implementation costs and long-term operational efficiency. Knowledge bases often provide the best balance between functionality and cost-effectiveness for document-heavy applications.

A company wants to use a large language model (LLM) to develop a conversational agent. The company needs to prevent the LLM from being manipulated with common prompt engineering techniques to perform undesirable actions or expose sensitive information.

Which action will reduce these risks?

Create a prompt template that teaches the LLM to detect attack patterns.

Increase the temperature parameter on invocation requests to the LLM.

Avoid using LLMs that are not listed in Amazon SageMaker.

Decrease the number of input tokens on invocations of the LLM.

Creating a prompt template that teaches the LLM to detect attack patterns is the correct approach. This method provides a robust defense mechanism against prompt injection attacks. Well-designed prompt templates with security guardrails can detect and prevent various attack patterns, including prompted persona switches, attempts to extract prompt templates, and instructions to ignore security controls.

The template can incorporate specific guardrails that validate input, sanitize prompts, and establish secure communication parameters. This approach is particularly effective because it addresses security at the foundational level of the LLM's interaction with users, creating a first line of defense against malicious inputs.

As for the incorrect options, increasing the temperature parameter would actually make the model's outputs less predictable and potentially more vulnerable to manipulation. Limiting LLM selection to those listed in SageMaker doesn't address the core security concerns, as security depends on implementation rather than the model source. Reducing input tokens is an ineffective approach since sophisticated attacks can be executed with minimal tokens while this restriction would unnecessarily limit the model's legitimate functionality.

When evaluating LLM security measures, focus on solutions that directly address the specific security concern at the interaction level rather than general model parameters or arbitrary restrictions.

A social media company wants to use a large language model (LLM) for content moderation. The company wants to evaluate the LLM outputs for bias and potential discrimination against specific groups or individuals.

Which data source should the company use to evaluate the LLM outputs with the least administrative effort?

User-generated content

Moderation logs

Content moderation guidelines

Benchmark datasets

Benchmark datasets are the most efficient choice for evaluating LLM outputs for bias and discrimination with minimal administrative effort. These datasets are specifically designed and curated by experts to test for various types of biases and discriminatory patterns in language models. They provide a standardized, ready-to-use approach for performance assessment that requires minimal setup and administration.

Benchmark datasets offer several advantages for bias evaluation:

- They are pre-validated and systematically curated to cover various dimensions of bias and discrimination

- They provide consistent, reproducible results for measuring model performance

- They come with established evaluation metrics and protocols, reducing the need for custom evaluation framework development

The other options would require significantly more administrative effort:

User-generated content would require extensive preprocessing, labeling, and validation before it could be used for bias evaluation. It would also need careful sampling to ensure comprehensive coverage of different bias types and edge cases.

Moderation logs, while valuable for real-world insights, would need substantial cleaning, standardization, and annotation to be useful for systematic bias evaluation. They might also contain inconsistencies in moderation decisions that could complicate the assessment process.

Content moderation guidelines are reference documents rather than evaluation tools. Using them for bias assessment would require creating custom evaluation frameworks and test cases, demanding significant administrative overhead.

Frequently Asked Questions

Yes, absolutely. The CLF-C01 exam is deceptively broad, requiring you to quickly differentiate between dozens of similar AWS services, pricing models, and support plans in specific scenarios—a common stumbling block. From our experience, CertVista's practice exams are the most effective tool to master this skill. Our realistic test engine simulates the actual exam environment, moving you beyond simple memorization to train the critical ability of applying knowledge correctly under pressure, which is the key to passing.

Based on performance data from thousands of successful users, the clear benchmark for success is consistently scoring 85% or higher across multiple CertVista practice exams. Achieving this score indicates you have a firm grasp of the concepts and can apply them effectively under timed conditions. It proves you are truly ready and strongly correlates with a first-time pass on the official exam.

CertVista provides a professional preparation tool where free quizzes fall short. The key differences are our realism, with questions that meticulously mirror the CLF-C01's scenario-based format, and our in-depth explanations for every answer choice—correct and incorrect—which transforms practice into a powerful learning tool. We also guarantee 100% coverage of all official exam domains and provide performance analytics to pinpoint your weak areas, ensuring your study time is as efficient as possible.

The single biggest mistake we see is candidates confusing the specific use cases for similar AWS services (e.g., AWS Inspector vs. AWS Trusted Advisor). CertVista's practice exams are specifically engineered to solve this problem. We immerse you in realistic, scenario-based questions that force you to make these critical distinctions repeatedly until the unique purpose of each service becomes second nature, eliminating the confusion that causes many to fail.

The CLF-C01 exam primarily uses multiple-choice and the more challenging multiple-response formats, both of which are extensively covered in our CertVista test engine. While other formats like matching or ordering are rare, our preparation methodology inherently prepares you for them. By focusing on the relationships between services, use cases, and best practices, our scenario-based questions and detailed explanations build a core competency that allows you to analyze and correctly answer any question format AWS might present.